Integrating Elastic-Job (with Dynamic Job Support)

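Our company's project recently needed distributed job scheduling. We evaluated several open-source frameworks (xxl-job, elastic-job and Quartz) and settled on Dangdang's Elastic-Job.

I don't know whether you have run into the same requirement, but we needed dynamic jobs. When I compared xxl-job and elastic-job, I found that both only support three kinds of jobs:

- jobs defined through annotations,
- jobs defined through configuration,
- existing jobs added through the admin console.

Neither supports adding jobs dynamically at runtime. A typical example is automatically cancelling an order half an hour after it is placed.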

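In theory xxl-job can support this. However, you would have to integrate deeply with its admin-side application and expose the corresponding endpoints for other services to call. That amounts to modifying its source directly, which would make later upgrades very painful, so we gave up on xxl-job.

Elastic-job's roadmap after its donation to Apache mentions opening up an API, but there is no stable release yet. We therefore had to build on the older 2.1.5 release. I looked at many integration approaches on GitHub and finally chose the one described below.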

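Under the hood everything still relies on elastic-job itself, so first add the elastic-job dependencies:

```xml
<dependency>
    <groupId>com.dangdang</groupId>
    <artifactId>elastic-job-lite-spring</artifactId>
    <version>2.1.5</version>
</dependency>
<dependency>
    <groupId>com.dangdang</groupId>
    <artifactId>elastic-job-lite-lifecycle</artifactId>
    <version>2.1.5</version>
</dependency>
```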

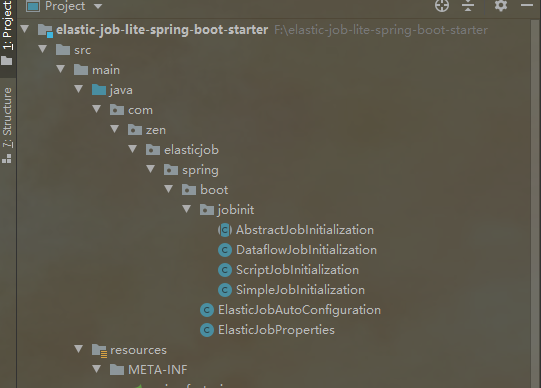

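This is the integration approach I found on GitHub: https://github.com/xjzrc/elastic-job-lite-spring-boot-starter. I cloned the code and modified it.

Because we need dynamic jobs, I did not pull it in through its Maven coordinates. Instead, I copied the entire source into the project and adapted it to our needs, which is more flexible. The project uses Spring Boot, and Lombok keeps the code concise.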

First, add the ZooKeeper configuration class:

```java
/**
 * ZooKeeper configuration
 *
 * @author Nil
 * @date 2020/9/14 11:24
 */
@Setter
@Getter
public class ZookeeperRegistryProperties {

    /**
     * List of ZooKeeper servers to connect to, including IP address and port.
     * Separate multiple addresses with commas, e.g. host1:2181,host2:2181
     */
    private String serverLists;

    /**
     * ZooKeeper namespace
     */
    private String namespace;

    /**
     * Initial wait time between retries, in milliseconds
     */
    private int baseSleepTimeMilliseconds = 1000;

    /**
     * Maximum wait time between retries, in milliseconds
     */
    private int maxSleepTimeMilliseconds = 3000;

    /**
     * Maximum number of retries
     */
    private int maxRetries = 3;

    /**
     * Connection timeout, in milliseconds
     */
    private int connectionTimeoutMilliseconds = 15000;

    /**
     * Session timeout, in milliseconds
     */
    private int sessionTimeoutMilliseconds = 60000;

    /**
     * Authorization token for connecting to ZooKeeper.
     * No authentication is required by default.
     */
    private String digest;
}
```

Next, add the Elastic-Job properties class. Its main purpose is to support configuration-driven jobs:

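```java
/**
 * Job configuration properties
 *
 * @author Nil
 * @date 2020/9/14 17:25
 */
@Getter
@Setter
@RefreshScope
@ConfigurationProperties(prefix = "spring.elasticjob")
public class ElasticJobProperties {

    /**
     * Registry center
     */
    private ZookeeperRegistryProperties zookeeper;

    /**
     * Simple job configurations (key: job name)
     */
    private Map<String, SimpleConfiguration> simples = new LinkedHashMap<>(16);

    /**
     * Dataflow job configurations (key: job name)
     */
    private Map<String, DataflowConfiguration> dataflows = new LinkedHashMap<>(16);

    /**
     * Script job configurations (key: job name)
     */
    private Map<String, ScriptConfiguration> scripts = new LinkedHashMap<>(16);

    @Getter
    @Setter
    public static class SimpleConfiguration extends JobConfiguration {
        /**
         * Job type
         */
        private final JobType jobType = JobType.SIMPLE;
    }

    @Getter
    @Setter
    public static class ScriptConfiguration extends JobConfiguration {
        /**
         * Job type
         */
        private final JobType jobType = JobType.SCRIPT;

        /**
         * Command line executed by the script job
         */
        private String scriptCommandLine;
    }

    @Getter
    @Setter
    public static class DataflowConfiguration extends JobConfiguration {
        /**
         * Job type
         */
        private final JobType jobType = JobType.DATAFLOW;

        /**
         * Whether data is processed as a stream.
         * If streaming, the job keeps executing as long as fetchData returns a non-empty result.
         * If not streaming, the job ends once the fetched data has been processed.
         */
        private boolean streamingProcess = false;
    }

    @Getter
    @Setter
    public static class JobConfiguration {

        /**
         * Job implementation class; must implement the ElasticJob interface
         */
        private String jobClass;

        /**
         * Reference to the registry center bean; must point to a reg:zookeeper declaration
         */
        private String registryCenterRef = ElasticJobAutoConfiguration.DEFAULT_REGISTRY_CENTER_NAME;

        /**
         * Cron expression that controls when the job fires
         */
        private String cron;

        /**
         * Total number of shards
         */
        private int shardingTotalCount = 1;

        /**
         * Shard index and parameter are separated by '=', multiple pairs by commas.
         * Shard indexes start at 0 and must be less than the total shard count.
         * Example: 0=a,1=b,2=c
         */
        private String shardingItemParameters = "0=A";

        /**
         * Job instance ID. Several instances of a job with the same name can run
         * on the same IP as long as their instance IDs differ.
         */
        private String jobInstanceId;

        /**
         * Custom job parameter, passed to the job's business method so the job can be parameterized,
         * e.g. the batch size per fetch, or the primary key the job instance reads from the database.
         */
        private String jobParameter;

        /**
         * Whether to monitor the job's runtime state.
         * If each execution and the interval between executions are very short, disabling monitoring
         * is recommended for efficiency: the state is transient, so monitoring adds little. Add your own
         * monitoring for data backlog instead; duplicate data selection is then not prevented,
         * so the job must be idempotent.
         * If executions and intervals are long, enabling monitoring is recommended; it ensures
         * the same data is not selected twice.
         */
        private boolean monitorExecution = true;

        /**
         * Job monitoring port. Configuring it is recommended so developers can dump job information.
         * Usage: echo "dump" | nc 127.0.0.1 9888
         */
        private int monitorPort = -1;

        /**
         * Maximum allowed clock difference, in seconds, between this machine and the registry center.
         * If the difference is larger, an exception is thrown when the job starts.
         * -1 disables the check.
         */
        private int maxTimeDiffSeconds = -1;

        /**
         * Whether failover is enabled
         */
        private boolean failover = false;

        /**
         * Whether misfired executions are re-run
         */
        private boolean misfire = true;

        /**
         * Fully qualified class name of the sharding strategy.
         * Defaults to the average allocation strategy.
         * See: http://elasticjob.io/docs/elastic-job-lite/02-guide/job-sharding-strategy
         */
        private String jobShardingStrategyClass;

        /**
         * Job description
         */
        private String description;

        /**
         * Whether the job is prevented from starting.
         * Useful for keeping jobs disabled during deployment and enabling them all afterwards.
         */
        private boolean disabled = false;

        /**
         * Whether the local configuration may overwrite the registry center's configuration.
         * If so, the local configuration wins every time the job starts.
         */
        private boolean overwrite = true;

        /**
         * Custom exception handler class
         */
        private String jobExceptionHandler = DefaultJobExceptionHandler.class.getCanonicalName();

        /**
         * Custom job thread pool handler class
         */
        private String executorServiceHandler = DefaultExecutorServiceHandler.class.getCanonicalName();

        /**
         * Interval of the service that repairs inconsistent job server state;
         * any value below 1 disables the repair. Unit: minutes
         */
        private int reconcileIntervalMinutes = 10;

        /**
         * Bean name of the data source used for job event tracing
         * (a blank value disables event tracing)
         */
        private String eventTraceRdbDataSource = "dataSource";

        /**
         * Listeners
         */
        private Listener listener;

        @Getter
        @Setter
        public static class Listener {

            /**
             * Listener executed on every job node.
             * If the job processes files on the job servers and deletes them afterwards,
             * consider letting every node run the cleanup. This type is simple to implement and does not
             * need to know whether the global distributed job has finished; prefer it where possible.
             *
             * Note: the class must implement com.dangdang.ddframe.job.lite.api.listener.ElasticJobListener
             */
            private String listenerClass;

            /**
             * Listener executed on only one node in a distributed setting.
             * If the job processes database data, only one node needs to clean up afterwards.
             * This type is complex: it must synchronize job state across the cluster and offers timeouts
             * to avoid deadlocks caused by out-of-sync jobs. Use it with care.
             *
             * Note: the class must extend com.dangdang.ddframe.job.lite.api.listener.AbstractDistributeOnceElasticJobListener
             */
            private String distributedListenerClass;

            /**
             * Timeout of the callback run before the last job execution starts, in milliseconds
             */
            private Long startedTimeoutMilliseconds = Long.MAX_VALUE;

            /**
             * Timeout of the callback run after the last job execution completes, in milliseconds
             */
            private Long completedTimeoutMilliseconds = Long.MAX_VALUE;
        }
    }
}
```

Create the auto-configuration class: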

```java
/**
 * elastic-job auto-configuration
 *
 * @author Nil
 * @date 2020/9/14 11:24
 */
@Configuration
@RequiredArgsConstructor
@EnableConfigurationProperties(ElasticJobProperties.class)
public class ElasticJobAutoConfiguration {

    public static final String DEFAULT_REGISTRY_CENTER_NAME = "elasticJobRegistryCenter";

    private final ElasticJobProperties elasticJobProperties;

    /**
     * Initialize the ZooKeeper registry center
     */
    @Bean(name = DEFAULT_REGISTRY_CENTER_NAME, initMethod = "init")
    @ConditionalOnMissingBean
    public ZookeeperRegistryCenter regCenter() {
        ZookeeperRegistryProperties zookeeperRegistryProperties = elasticJobProperties.getZookeeper();
        ZookeeperConfiguration zookeeperConfiguration = new ZookeeperConfiguration(
                zookeeperRegistryProperties.getServerLists(), zookeeperRegistryProperties.getNamespace());
        zookeeperConfiguration.setBaseSleepTimeMilliseconds(zookeeperRegistryProperties.getBaseSleepTimeMilliseconds());
        zookeeperConfiguration.setConnectionTimeoutMilliseconds(zookeeperRegistryProperties.getConnectionTimeoutMilliseconds());
        zookeeperConfiguration.setMaxSleepTimeMilliseconds(zookeeperRegistryProperties.getMaxSleepTimeMilliseconds());
        zookeeperConfiguration.setSessionTimeoutMilliseconds(zookeeperRegistryProperties.getSessionTimeoutMilliseconds());
        zookeeperConfiguration.setMaxRetries(zookeeperRegistryProperties.getMaxRetries());
        zookeeperConfiguration.setDigest(zookeeperRegistryProperties.getDigest());
        return new ZookeeperRegistryCenter(zookeeperConfiguration);
    }

    /**
     * Initialize simple jobs
     */
    @Bean(initMethod = "init")
    @ConditionalOnMissingBean
    public SimpleJobInitialization simpleJobInitialization() {
        return new SimpleJobInitialization(elasticJobProperties.getSimples());
    }

    /**
     * Initialize dataflow jobs
     */
    @Bean(initMethod = "init")
    @ConditionalOnMissingBean
    public DataflowJobInitialization dataflowJobInitialization() {
        return new DataflowJobInitialization(elasticJobProperties.getDataflows());
    }

    /**
     * Initialize script jobs
     */
    @Bean(initMethod = "init")
    @ConditionalOnMissingBean
    @ConditionalOnBean(ZookeeperRegistryCenter.class)
    public ScriptJobInitialization scriptJobInitialization() {
        return new ScriptJobInitialization(elasticJobProperties.getScripts());
    }

    /**
     * Initialize dynamic jobs
     * (inside a @Configuration class, this.regCenter() returns the container's singleton)
     */
    @Bean(initMethod = "init")
    @ConditionalOnMissingBean
    public DynamicJobInitialization dynamicJobInitialization() {
        return new DynamicJobInitialization(this.regCenter());
    }
}
```

AbstractJobInitialization is the core base class. It carries out the actual job initialization:

```java
/**
 * Abstract base class for job initialization
 *
 * @author Nil
 * @date 2020/9/14 19:24
 */
public abstract class AbstractJobInitialization implements ApplicationContextAware {

    protected ApplicationContext applicationContext;

    @Override
    public void setApplicationContext(ApplicationContext applicationContext) {
        this.applicationContext = applicationContext;
    }

    /**
     * Initialize a job
     *
     * @param jobName       job name
     * @param jobType       job type
     * @param configuration job configuration
     */
    protected void initJob(String jobName, JobType jobType, ElasticJobProperties.JobConfiguration configuration) {
        // Register the job bean in the Spring container (null for script jobs)
        ElasticJob elasticJob = registerElasticJob(jobName, configuration.getJobClass(), jobType);
        // Look up the registry center
        ZookeeperRegistryCenter regCenter = getZookeeperRegistryCenter(configuration.getRegistryCenterRef());
        // Build the core configuration
        JobCoreConfiguration jobCoreConfiguration = getJobCoreConfiguration(jobName, configuration);
        // Build the job-type-specific configuration
        JobTypeConfiguration jobTypeConfiguration = getJobTypeConfiguration(jobName, jobType, jobCoreConfiguration);
        // Build the Lite job configuration
        LiteJobConfiguration liteJobConfiguration = getLiteJobConfiguration(jobTypeConfiguration, configuration);
        // Data source configuration for job event tracing (null if tracing is disabled)
        JobEventRdbConfiguration jobEventRdbConfiguration = getJobEventRdbConfiguration(configuration.getEventTraceRdbDataSource());
        // Job listeners
        ElasticJobListener[] elasticJobListeners = createElasticJobListeners(configuration.getListener());
        // Register and start the job
        if (null == jobEventRdbConfiguration) {
            new SpringJobScheduler(elasticJob, regCenter, liteJobConfiguration, elasticJobListeners).init();
        } else {
            new SpringJobScheduler(elasticJob, regCenter, liteJobConfiguration, jobEventRdbConfiguration, elasticJobListeners).init();
        }
    }

    /**
     * Build the job-type-specific configuration
     *
     * @param jobName              job name
     * @param jobType              job type
     * @param jobCoreConfiguration core job configuration
     * @return JobTypeConfiguration
     */
    public abstract JobTypeConfiguration getJobTypeConfiguration(String jobName, JobType jobType, JobCoreConfiguration jobCoreConfiguration);

    /**
     * Get the job instance, registering it in the Spring container if needed
     *
     * @param jobName  job name
     * @param strClass fully qualified job class name
     * @param jobType  job type
     * @return ElasticJob, or null for script jobs, which have no job class
     */
    private ElasticJob registerElasticJob(String jobName, String strClass, JobType jobType) {
        switch (jobType) {
            case SIMPLE:
                return registerBean(jobName, strClass, SimpleJob.class);
            case DATAFLOW:
                return registerBean(jobName, strClass, DataflowJob.class);
            default:
                return null;
        }
    }

    /**
     * Get the registry center
     *
     * @param registryCenterRef registry center bean name
     * @return ZookeeperRegistryCenter
     */
    protected ZookeeperRegistryCenter getZookeeperRegistryCenter(String registryCenterRef) {
        if (StringUtils.isBlank(registryCenterRef)) {
            registryCenterRef = ElasticJobAutoConfiguration.DEFAULT_REGISTRY_CENTER_NAME;
        }
        if (!applicationContext.containsBean(registryCenterRef)) {
            throw new ServiceException("not exist ZookeeperRegistryCenter [" + registryCenterRef + "] !");
        }
        return applicationContext.getBean(registryCenterRef, ZookeeperRegistryCenter.class);
    }

    /**
     * Get the data source configuration for job event tracing
     *
     * @param eventTraceRdbDataSource bean name of the event-tracing data source
     * @return JobEventRdbConfiguration, or null if no data source is configured
     */
    private JobEventRdbConfiguration getJobEventRdbConfiguration(String eventTraceRdbDataSource) {
        if (StringUtils.isBlank(eventTraceRdbDataSource)) {
            return null;
        }
        if (!applicationContext.containsBean(eventTraceRdbDataSource)) {
            throw new ServiceException("not exist datasource [" + eventTraceRdbDataSource + "] !");
        }
        DataSource dataSource = applicationContext.getBean(eventTraceRdbDataSource, DataSource.class);
        return new JobEventRdbConfiguration(dataSource);
    }

    /**
     * Build the Lite job configuration
     *
     * @param jobTypeConfiguration job-type-specific configuration
     * @param jobConfiguration     job configuration
     * @return LiteJobConfiguration
     */
    private LiteJobConfiguration getLiteJobConfiguration(JobTypeConfiguration jobTypeConfiguration, ElasticJobProperties.JobConfiguration jobConfiguration) {
        return LiteJobConfiguration.newBuilder(Objects.requireNonNull(jobTypeConfiguration))
                .monitorExecution(jobConfiguration.isMonitorExecution())
                .monitorPort(jobConfiguration.getMonitorPort())
                .maxTimeDiffSeconds(jobConfiguration.getMaxTimeDiffSeconds())
                .jobShardingStrategyClass(jobConfiguration.getJobShardingStrategyClass())
                .reconcileIntervalMinutes(jobConfiguration.getReconcileIntervalMinutes())
                .disabled(jobConfiguration.isDisabled())
                .overwrite(jobConfiguration.isOverwrite())
                .build();
    }

    /**
     * Build the core job configuration
     *
     * @param jobName          job name
     * @param jobConfiguration job configuration
     * @return JobCoreConfiguration
     */
    protected JobCoreConfiguration getJobCoreConfiguration(String jobName, ElasticJobProperties.JobConfiguration jobConfiguration) {
        JobCoreConfiguration.Builder builder = JobCoreConfiguration.newBuilder(jobName, jobConfiguration.getCron(), jobConfiguration.getShardingTotalCount())
                .shardingItemParameters(jobConfiguration.getShardingItemParameters())
                .jobParameter(jobConfiguration.getJobParameter())
                .failover(jobConfiguration.isFailover())
                .misfire(jobConfiguration.isMisfire())
                .description(jobConfiguration.getDescription());
        if (StringUtils.isNotBlank(jobConfiguration.getJobExceptionHandler())) {
            builder.jobProperties(JobProperties.JobPropertiesEnum.JOB_EXCEPTION_HANDLER.getKey(), jobConfiguration.getJobExceptionHandler());
        }
        if (StringUtils.isNotBlank(jobConfiguration.getExecutorServiceHandler())) {
            builder.jobProperties(JobProperties.JobPropertiesEnum.EXECUTOR_SERVICE_HANDLER.getKey(), jobConfiguration.getExecutorServiceHandler());
        }
        return builder.build();
    }

    /**
     * Create the job listeners
     *
     * @param listener listener configuration
     * @return ElasticJobListener[] (empty if none are configured)
     */
    private ElasticJobListener[] createElasticJobListeners(ElasticJobProperties.JobConfiguration.Listener listener) {
        if (null == listener) {
            return new ElasticJobListener[0];
        }
        List<ElasticJobListener> elasticJobListeners = new ArrayList<>(2);
        // Listener executed on every job node
        ElasticJobListener elasticJobListener = registerBean(listener.getListenerClass(), listener.getListenerClass(), ElasticJobListener.class);
        if (null != elasticJobListener) {
            elasticJobListeners.add(elasticJobListener);
        }
        // Listener executed on only one node; the timeouts are passed as constructor arguments
        AbstractDistributeOnceElasticJobListener distributedListener = registerBean(listener.getDistributedListenerClass(), listener.getDistributedListenerClass(),
                AbstractDistributeOnceElasticJobListener.class, listener.getStartedTimeoutMilliseconds(), listener.getCompletedTimeoutMilliseconds());
        if (null != distributedListener) {
            elasticJobListeners.add(distributedListener);
        }
        return elasticJobListeners.toArray(new ElasticJobListener[0]);
    }

    /**
     * Register a bean in the Spring container
     *
     * @param beanName            bean name
     * @param strClass            fully qualified class name
     * @param tClass              bean type
     * @param constructorArgValue constructor arguments
     * @param <T>                 bean type
     * @return T, or null if no class is configured
     */
    protected <T> T registerBean(String beanName, String strClass, Class<T> tClass, Object... constructorArgValue) {
        // Nothing configured (e.g. no listener), so nothing to register
        if (StringUtils.isBlank(strClass)) {
            return null;
        }
        if (StringUtils.isBlank(beanName)) {
            beanName = strClass;
        }
        // Reuse the bean if it already exists in the Spring container
        if (applicationContext.containsBean(beanName)) {
            return applicationContext.getBean(beanName, tClass);
        }
        // Otherwise create a bean definition and register it with the container
        BeanDefinitionBuilder beanDefinitionBuilder = BeanDefinitionBuilder.rootBeanDefinition(strClass);
        beanDefinitionBuilder.setScope(BeanDefinition.SCOPE_PROTOTYPE);
        // Constructor arguments
        for (Object argValue : constructorArgValue) {
            beanDefinitionBuilder.addConstructorArgValue(argValue);
        }
        getDefaultListableBeanFactory().registerBeanDefinition(beanName, beanDefinitionBuilder.getBeanDefinition());
        return applicationContext.getBean(beanName, tClass);
    }

    /**
     * Get the bean factory
     *
     * @return DefaultListableBeanFactory
     */
    private DefaultListableBeanFactory getDefaultListableBeanFactory() {
        return (DefaultListableBeanFactory) ((ConfigurableApplicationContext) applicationContext).getBeanFactory();
    }
}
```

Then add the initializers for simple, dataflow and script jobs:

```java
/**
 * Dataflow job initialization
 *
 * @author Nil
 * @date 2020/9/14 19:15
 */
public class DataflowJobInitialization extends AbstractJobInitialization {

    private final Map<String, ElasticJobProperties.DataflowConfiguration> dataflowConfigurationMap;

    public DataflowJobInitialization(Map<String, ElasticJobProperties.DataflowConfiguration> dataflowConfigurationMap) {
        this.dataflowConfigurationMap = dataflowConfigurationMap;
    }

    public void init() {
        if (CollUtil.isNotEmpty(dataflowConfigurationMap)) {
            // key: job name, value: job configuration
            dataflowConfigurationMap.forEach((jobName, configuration) ->
                    initJob(jobName, configuration.getJobType(), configuration));
        }
    }

    @Override
    public JobTypeConfiguration getJobTypeConfiguration(String jobName, JobType jobType, JobCoreConfiguration jobCoreConfiguration) {
        ElasticJobProperties.DataflowConfiguration configuration = dataflowConfigurationMap.get(jobName);
        return new DataflowJobConfiguration(jobCoreConfiguration, configuration.getJobClass(), configuration.isStreamingProcess());
    }
}
```

```java
/**
 * Simple job initialization
 *
 * @author Nil
 * @date 2020/9/14 19:14
 */
public class SimpleJobInitialization extends AbstractJobInitialization {

    private final Map<String, ElasticJobProperties.SimpleConfiguration> simpleConfigurationMap;

    public SimpleJobInitialization(Map<String, ElasticJobProperties.SimpleConfiguration> simpleConfigurationMap) {
        this.simpleConfigurationMap = simpleConfigurationMap;
    }

    public void init() {
        if (CollUtil.isNotEmpty(simpleConfigurationMap)) {
            // key: job name, value: job configuration
            simpleConfigurationMap.forEach((jobName, configuration) ->
                    initJob(jobName, configuration.getJobType(), configuration));
        }
    }

    @Override
    public JobTypeConfiguration getJobTypeConfiguration(String jobName, JobType jobType, JobCoreConfiguration jobCoreConfiguration) {
        ElasticJobProperties.SimpleConfiguration configuration = simpleConfigurationMap.get(jobName);
        return new SimpleJobConfiguration(jobCoreConfiguration, configuration.getJobClass());
    }
}
```

```java
/**
 * Script job initialization
 *
 * @author Nil
 * @date 2020/9/14 20:45
 */
public class ScriptJobInitialization extends AbstractJobInitialization {

    private final Map<String, ElasticJobProperties.ScriptConfiguration> scriptConfigurationMap;

    public ScriptJobInitialization(final Map<String, ElasticJobProperties.ScriptConfiguration> scriptConfigurationMap) {
        this.scriptConfigurationMap = scriptConfigurationMap;
    }

    public void init() {
        if (CollUtil.isNotEmpty(scriptConfigurationMap)) {
            // key: job name, value: job configuration
            scriptConfigurationMap.forEach((jobName, configuration) ->
                    initJob(jobName, configuration.getJobType(), configuration));
        }
    }

    @Override
    public JobTypeConfiguration getJobTypeConfiguration(String jobName, JobType jobType, JobCoreConfiguration jobCoreConfiguration) {
        ElasticJobProperties.ScriptConfiguration configuration = scriptConfigurationMap.get(jobName);
        return new ScriptJobConfiguration(jobCoreConfiguration, configuration.getScriptCommandLine());
    }
}
```

Below is the core part. It adds and updates dynamic jobs (the class does not include a delete method). It also re-initializes them automatically on startup, which covers most scenarios. The code:

```java
/**
 * Dynamic job initialization (supports simple and dataflow jobs)
 *
 * @author Nil
 * @date 2020/9/14 19:22
 */
@Slf4j
public class DynamicJobInitialization extends AbstractJobInitialization {

    private final JobStatisticsAPI jobStatisticsAPI;

    private final JobSettingsAPI jobSettingsAPI;

    public DynamicJobInitialization(ZookeeperRegistryCenter zookeeperRegistryCenter) {
        this.jobStatisticsAPI = new JobStatisticsAPIImpl(zookeeperRegistryCenter);
        this.jobSettingsAPI = new JobSettingsAPIImpl(zookeeperRegistryCenter);
    }

    /**
     * On startup, re-initialize jobs that are registered in ZooKeeper but offline
     * and whose cron expression will still fire in the future
     */
    public void init() {
        Collection<JobBriefInfo> allJob = jobStatisticsAPI.getAllJobsBriefInfo();
        if (CollUtil.isNotEmpty(allJob)) {
            allJob.forEach(jobInfo -> {
                // Jobs that are offline
                if (JobBriefInfo.JobStatus.CRASHED.equals(jobInfo.getStatus())) {
                    try {
                        CronExpression cronExpression = new CronExpression(jobInfo.getCron());
                        Date nextValidTimeAfter = cronExpression.getNextValidTimeAfter(new Date());
                        // The cron expression will still fire, so restore the job
                        if (ObjectUtil.isNotNull(nextValidTimeAfter)) {
                            this.initJobHandler(jobInfo.getJobName());
                        }
                    } catch (ParseException e) {
                        log.error(e.getMessage(), e);
                    }
                }
            });
        }
    }

    /**
     * Re-initialize a job from the settings stored in ZooKeeper
     *
     * @param jobName job name
     */
    private void initJobHandler(String jobName) {
        try {
            JobSettings jobSetting = jobSettingsAPI.getJobSettings(jobName);
            if (ObjectUtil.isNotNull(jobSetting)) {
                // The part of the job name before the first underscore identifies the job class
                String jobCode = StrUtil.subBefore(jobSetting.getJobName(), StrUtil.UNDERLINE, false);
                JobClassEnum jobClassEnum = JobClassEnum.convert(jobCode);
                if (ObjectUtil.isNotNull(jobClassEnum)) {
                    ElasticJobProperties.JobConfiguration configuration = new ElasticJobProperties.JobConfiguration();
                    configuration.setCron(jobSetting.getCron());
                    configuration.setJobParameter(jobSetting.getJobParameter());
                    configuration.setShardingTotalCount(jobSetting.getShardingTotalCount());
                    configuration.setDescription(jobSetting.getDescription());
                    configuration.setShardingItemParameters(jobSetting.getShardingItemParameters());
                    configuration.setJobClass(jobClassEnum.getClazz().getCanonicalName());
                    super.initJob(jobName, JobType.valueOf(jobSetting.getJobType()), configuration);
                }
            }
        } catch (Exception e) {
            log.error("Failed to initialize job: {}", e.getMessage(), e);
        }
    }

    /**
     * Save or update a job
     *
     * @param job      job definition (project-specific entity: name, type, cron, parameter, sharding settings)
     * @param jobClass job implementation class
     */
    public void addOrUpdateJob(Job job, Class<?> jobClass) {
        ElasticJobProperties.JobConfiguration configuration = new ElasticJobProperties.JobConfiguration();
        configuration.setCron(job.getCron());
        configuration.setJobParameter(job.getJobParameter());
        configuration.setShardingTotalCount(job.getShardingTotalCount());
        configuration.setShardingItemParameters(job.getShardingItemParameters());
        configuration.setJobClass(jobClass.getCanonicalName());
        super.initJob(job.getJobName(), JobType.valueOf(job.getJobType()), configuration);
    }

    @Override
    public JobTypeConfiguration getJobTypeConfiguration(String jobName, JobType jobType, JobCoreConfiguration jobCoreConfiguration) {
        String jobCode = StrUtil.subBefore(jobName, StrUtil.UNDERLINE, false);
        JobClassEnum jobClassEnum = JobClassEnum.convert(jobCode);
        if (ObjectUtil.isNotNull(jobClassEnum)) {
            if (JobType.SIMPLE.equals(jobType)) {
                return new SimpleJobConfiguration(jobCoreConfiguration, jobClassEnum.getClazz().getCanonicalName());
            } else if (JobType.DATAFLOW.equals(jobType)) {
                return new DataflowJobConfiguration(jobCoreConfiguration, jobClassEnum.getClazz().getCanonicalName(), false);
            }
        }
        return null;
    }
}
```
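DynamicJobInitialization depends on a project-specific `JobClassEnum` and a job-naming convention that the post does not show. Under that convention, the part of the job name before the first underscore selects the job class.

Below is a hypothetical plain-Java sketch of that convention. The enum constants, the codes and the placeholder job classes are made up for illustration. In a real project the enum would point at `SimpleJob` or `DataflowJob` implementations.

```java
import java.util.Arrays;

public class JobClassEnumDemo {

    // Hypothetical placeholders standing in for real SimpleJob/DataflowJob implementations
    static class OrderCancelJob { }
    static class CouponExpireJob { }

    // Hypothetical mapping from job code (job name prefix) to job class
    enum JobClassEnum {
        ORDER_CANCEL("orderCancel", OrderCancelJob.class),
        COUPON_EXPIRE("couponExpire", CouponExpireJob.class);

        private final String code;
        private final Class<?> clazz;

        JobClassEnum(String code, Class<?> clazz) {
            this.code = code;
            this.clazz = clazz;
        }

        public Class<?> getClazz() {
            return clazz;
        }

        /** Returns the constant whose code matches, or null if none does. */
        static JobClassEnum convert(String code) {
            return Arrays.stream(values())
                    .filter(e -> e.code.equals(code))
                    .findFirst()
                    .orElse(null);
        }
    }

    /** Text before the first underscore (the whole name if there is none). */
    static String jobCode(String jobName) {
        int idx = jobName.indexOf('_');
        return idx < 0 ? jobName : jobName.substring(0, idx);
    }

    /** Builds a dynamic job name such as orderCancel_10086. */
    static String jobName(JobClassEnum type, String businessId) {
        return type.code + "_" + businessId;
    }

    public static void main(String[] args) {
        String name = jobName(JobClassEnum.ORDER_CANCEL, "10086");
        JobClassEnum resolved = JobClassEnum.convert(jobCode(name));
        // prints "orderCancel_10086 -> OrderCancelJob"
        System.out.println(name + " -> " + resolved.getClazz().getSimpleName());
    }
}
```

Because the code ends at the first underscore, job codes themselves must not contain underscores, while the business suffix (an order ID, for example) may. A unique suffix per business entity keeps each dynamic job's name unique in ZooKeeper.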

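Register the dynamic job initializer in ElasticJobAutoConfiguration (this bean is already part of the class shown earlier):

```java
/**
 * Initialize dynamic jobs
 */
@Bean(initMethod = "init")
@ConditionalOnMissingBean
public DynamicJobInitialization dynamicJobInitialization() {
    return new DynamicJobInitialization(this.regCenter());
}
```

Why implement it this way? I noticed that every time the service is redeployed, all dynamic jobs that have not yet run show up as "offline" (status `CRASHED`). This is probably related to ZooKeeper, and the jobs must be re-initialized.

Annotation- and configuration-based jobs are initialized automatically on startup, but dynamically added jobs are not, so we have to do it ourselves. One idea was to maintain the jobs in a dedicated database table and initialize them from it on every startup, but that is too cumbersome.

The final implementation uses elastic-job's existing APIs instead:

1. On startup, iterate over the job nodes already in ZooKeeper.
2. Check whether each job's cron expression has expired.
3. If it has not, re-initialize the job.

Re-initialization overwrites the existing configuration (`overwrite` defaults to `true`), so it has no side effects. New jobs can then be added from outside, for example via MQ, and a service call points each job at the corresponding scheduled logic.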
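A one-off job, such as cancelling an order half an hour after it is placed, can use a cron expression that pins an exact second and year. Once that moment has passed, `getNextValidTimeAfter` returns null and the startup check in `DynamicJobInitialization.init()` skips the job.

Below is a minimal plain-Java sketch. It assumes Quartz-style seven-field cron syntax: seconds, minutes, hours, day of month, month, day of week, and optional year. The helper names are hypothetical.

`isStillPending` only understands expressions produced by `toOneShotCron`. By contrast, Quartz's `CronExpression` handles arbitrary expressions. The fire time is interpreted in the scheduler's default time zone.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class OneShotCron {

    // Quartz-style fields: second minute hour day-of-month month day-of-week year
    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("s m H d M '?' uuuu");

    /** Builds a cron expression that matches exactly one moment (to the second). */
    static String toOneShotCron(LocalDateTime fireTime) {
        return fireTime.format(FORMAT);
    }

    /** Parses an expression produced by toOneShotCron back into its fire time. */
    static LocalDateTime fireTimeOf(String oneShotCron) {
        return LocalDateTime.parse(oneShotCron, FORMAT);
    }

    /** True if the one-shot job has not fired yet, i.e. it should be restored on startup. */
    static boolean isStillPending(String oneShotCron, LocalDateTime now) {
        return fireTimeOf(oneShotCron).isAfter(now);
    }

    public static void main(String[] args) {
        // Order placed at 11:40:07, to be cancelled 30 minutes later
        LocalDateTime placedAt = LocalDateTime.of(2020, 9, 14, 11, 40, 7);
        String cron = toOneShotCron(placedAt.plusMinutes(30));
        System.out.println(cron);                                         // 7 10 12 14 9 ? 2020
        System.out.println(isStillPending(cron, placedAt));               // true
        System.out.println(isStillPending(cron, placedAt.plusHours(1)));  // false
    }
}
```

Such an expression could be passed as the cron of the job handed to `addOrUpdateJob`.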

(If anyone has a better way to initialize dynamic jobs, I'd love to hear it.)

Configuration-driven jobs, by contrast, only need to be declared in the configuration file and implemented:

```yaml
spring:
  elasticjob:
    # Registry center
    zookeeper:
      server-lists: 127.0.0.1:6181
      namespace: elastic-job-spring-boot-stater-demo
    # Simple jobs
    simples:
      # Example Spring simple job
      spring-simple-job:
        # Must implement com.dangdang.ddframe.job.api.simple.SimpleJob
        job-class: com.zen.spring.boot.demo.elasticjob.job.SpringSimpleJob
        cron: 0/2 * * * * ?
        sharding-total-count: 3
        sharding-item-parameters: 0=Beijing,1=Shanghai,2=Guangzhou
        # Listeners
        listener:
          # Listener executed on every job node; must implement com.dangdang.ddframe.job.lite.api.listener.ElasticJobListener
          listener-class: com.zen.spring.boot.demo.elasticjob.listener.MyElasticJobListener
    # Dataflow jobs
    dataflows:
      # Example Spring dataflow job
      spring-dataflow-job:
        # Must implement com.dangdang.ddframe.job.api.dataflow.DataflowJob
        job-class: com.zen.spring.boot.demo.elasticjob.job.SpringDataflowJob
        cron: 0/2 * * * * ?
        sharding-total-count: 3
        sharding-item-parameters: 0=Beijing,1=Shanghai,2=Guangzhou
        streaming-process: true
        # Listeners
        listener:
          # Listener executed on only one node in the cluster; must extend com.dangdang.ddframe.job.lite.api.listener.AbstractDistributeOnceElasticJobListener
          distributed-listener-class: com.zen.spring.boot.demo.elasticjob.listener.MyDistributeElasticJobListener
          started-timeout-milliseconds: 5000
          completed-timeout-milliseconds: 10000
    # Script jobs
    scripts:
      # Example script job
      script-job:
        cron: 0/2 * * * * ?
        sharding-total-count: 3
        sharding-item-parameters: 0=Beijing,1=Shanghai,2=Guangzhou
        script-command-line: youPath/spring-boot-starter-demo/elastic-job-spring-boot-starter-demo/src/main/resources/script/demo.bat
```

The integration above covers our current needs. If you have a better integration approach, I'd be glad to discuss it. I'm looking forward to version 3 after the move to Apache, which should offer more APIs so we no longer have to reinvent the wheel.