Writing Spring Cloud Microservice Logs to ELK via a Kafka Buffer

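Preparation

With the preparation above in place, logs can be written to Kafka in full and then, after being buffered by Kafka, written asynchronously to ELK.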

Start Kafka

Start ZooKeeper (newer Kafka versions will no longer depend on an external ZooKeeper):

$ bin/zookeeper-server-start.sh config/zookeeper.properties

Start the Kafka broker:

$ bin/kafka-server-start.sh config/server.properties

Create a topic:

$ bin/kafka-topics.sh --create --topic logger-channel --bootstrap-server localhost:9092

Start Elasticsearch

bin/elasticsearch

Start Kibana

bin/kibana

Start Logstash

Write the configuration file kafka.conf:

# Sample Logstash configuration for a simple
# Kafka -> Logstash -> Elasticsearch pipeline.
input {
  kafka {
    id => "my_plugin_id"
    bootstrap_servers => "localhost:9092"
    topics => ["logger-channel"]
    auto_offset_reset => "latest"
  }
}
filter {
  # Parse the JSON text in "message" into top-level event fields.
  json {
    source => "message"
  }
  # Use the application's "time" field as the event @timestamp,
  # then drop "time" once it has been parsed successfully.
  date {
    match => ["time", "yyyy-MM-dd HH:mm:ss.SSS"]
    remove_field => ["time"]
  }
}
output {
  #stdout {}
  elasticsearch {
    hosts => ["http://localhost:9200"]
    # One index per day, named from the event @timestamp.
    index => "logs-%{+YYYY.MM.dd}"
    #user => "elastic"
    #password => "changeme"
  }
}
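The date filter and the daily index name interact in a way that is easy to overlook, so here is a rough Java sketch of what happens to the `time` field. It assumes the `time` string carries no zone information and is interpreted in a given time zone (Logstash falls back to the platform default when the filter sets no `timezone`), and that the `%{+YYYY.MM.dd}` part of the index name is rendered from `@timestamp` in UTC. The class name `LogstashDateSketch` and the sample timestamps are made up for illustration; this is not Logstash's own code.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.ZonedDateTime;
import java.time.format.DateTimeFormatter;

final class LogstashDateSketch {
    // Same pattern the date filter matches against the "time" field.
    private static final DateTimeFormatter TIME_FIELD =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss.SSS");

    // java.time spelling of the "YYYY.MM.dd" day pattern used in the index option.
    private static final DateTimeFormatter INDEX_DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd");

    // Returns the daily index a log line with this "time" value would land in,
    // assuming the value is local time in the given zone.
    static String indexFor(String time, String zoneId) {
        LocalDateTime local = LocalDateTime.parse(time, TIME_FIELD);
        ZonedDateTime utc = local.atZone(ZoneId.of(zoneId))
                .withZoneSameInstant(ZoneOffset.UTC);
        return "logs-" + INDEX_DAY.format(utc);
    }

    public static void main(String[] args) {
        // Late evening in UTC+8 is still the same calendar day in UTC.
        System.out.println(indexFor("2024-01-15 23:30:00.123", "Asia/Shanghai"));
        // Early morning in UTC+8 is still the previous calendar day in UTC.
        System.out.println(indexFor("2024-01-15 07:30:00.000", "Asia/Shanghai"));
    }
}
```

Under these assumptions, a log written early in the morning local time can appear in the previous day's index, which is worth keeping in mind when choosing an index pattern in Kibana.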

Start the service:

./bin/logstash -f ./config/kafka.conf --config.reload.automatic

Configure the Spring Boot service's logging file

logback-spring.xml

kafka-log-test

%d{HH:mm:ss.SSS} %contextName [%thread] %-5level %logger{36} - %msg%n

${logDir}/${logName}.log

${logDir}/history/${myspringboottest_log}.%d{yyyy-MM-dd}.rar

30

%d{HH:mm:ss.SSS} %contextName [%thread] %-5level %logger{36} - %msg%n

false

true

{"appName":"${applicationName}","env":"${profileActive}"}

UTF-8

logger-channel

bootstrap.servers=localhost:9092

true

true

0

2048
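For orientation, the Logstash pipeline expects each Kafka message to be a JSON object with a `time` field in `yyyy-MM-dd HH:mm:ss.SSS` format, and the custom-fields value above contributes `appName` and `env`. The sketch below builds such a payload by hand in plain Java, purely to show the shape. The `level` and `msg` field names, the class name `LogEventSketch`, and the sample values are hypothetical; the real field set depends on the encoder configured in logback-spring.xml.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

final class LogEventSketch {
    // Format that the Logstash date filter is configured to parse.
    static final DateTimeFormatter TIME =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss.SSS");

    // Escape quotes, backslashes and control characters for a JSON string value.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Build one JSON log line; "level" and "msg" are illustrative field names.
    static String toJson(String appName, String env, String level, String msg, String time) {
        return "{\"appName\":\"" + escape(appName) + "\","
                + "\"env\":\"" + escape(env) + "\","
                + "\"time\":\"" + escape(time) + "\","
                + "\"level\":\"" + escape(level) + "\","
                + "\"msg\":\"" + escape(msg) + "\"}";
    }

    public static void main(String[] args) {
        String now = LocalDateTime.now().format(TIME);
        System.out.println(toJson("demo-service", "dev", "INFO", "user logged in", now));
    }
}
```

In a real service the logback encoder produces this JSON; hand-building it is only useful for reasoning about what Logstash's `json` and `date` filters will see.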

Start the Spring Boot service and log any message.

View the logs in Kibana

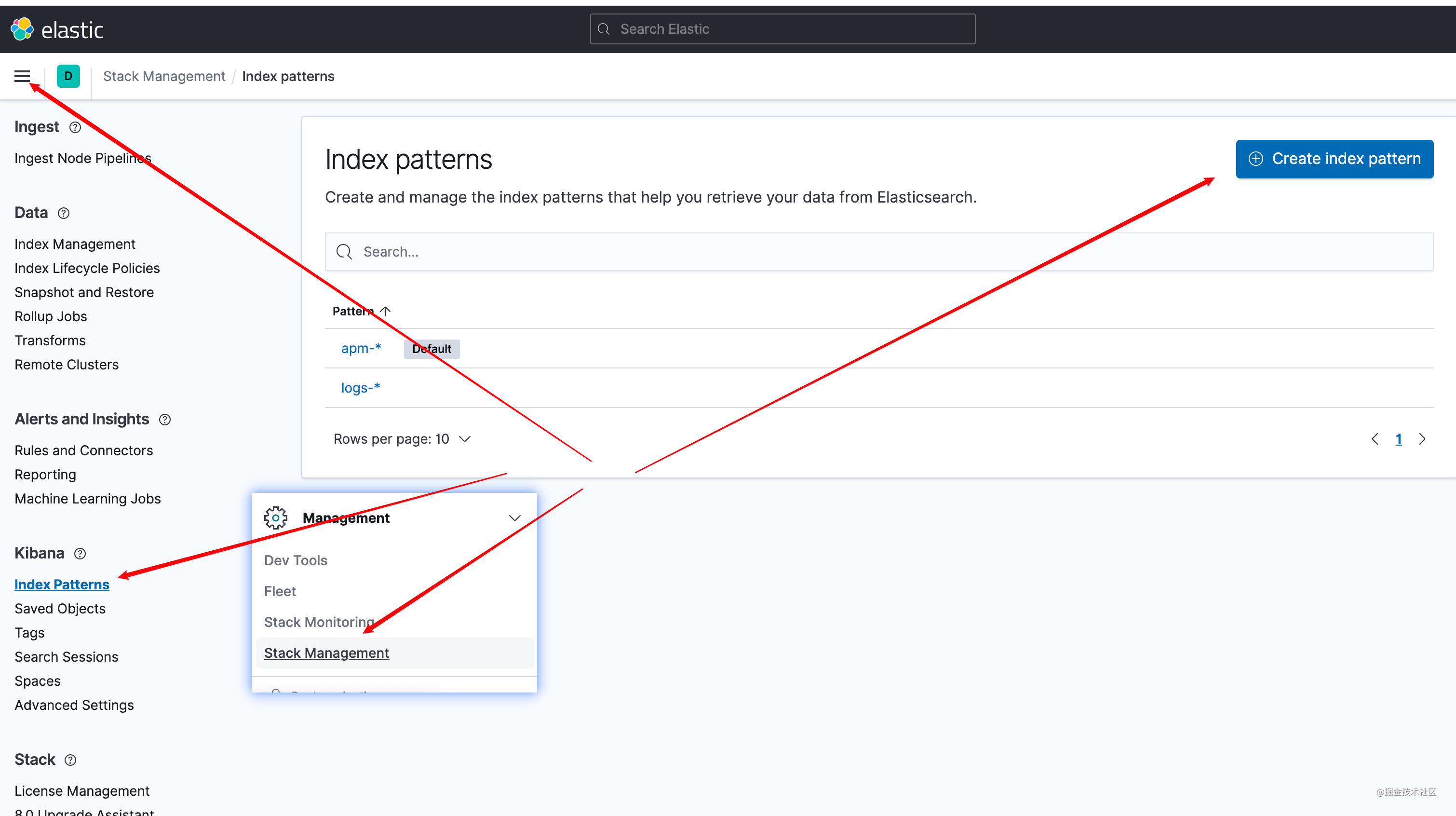

From the menu, go to Stack Management >> Index Patterns >> Create index pattern to create an index pattern.

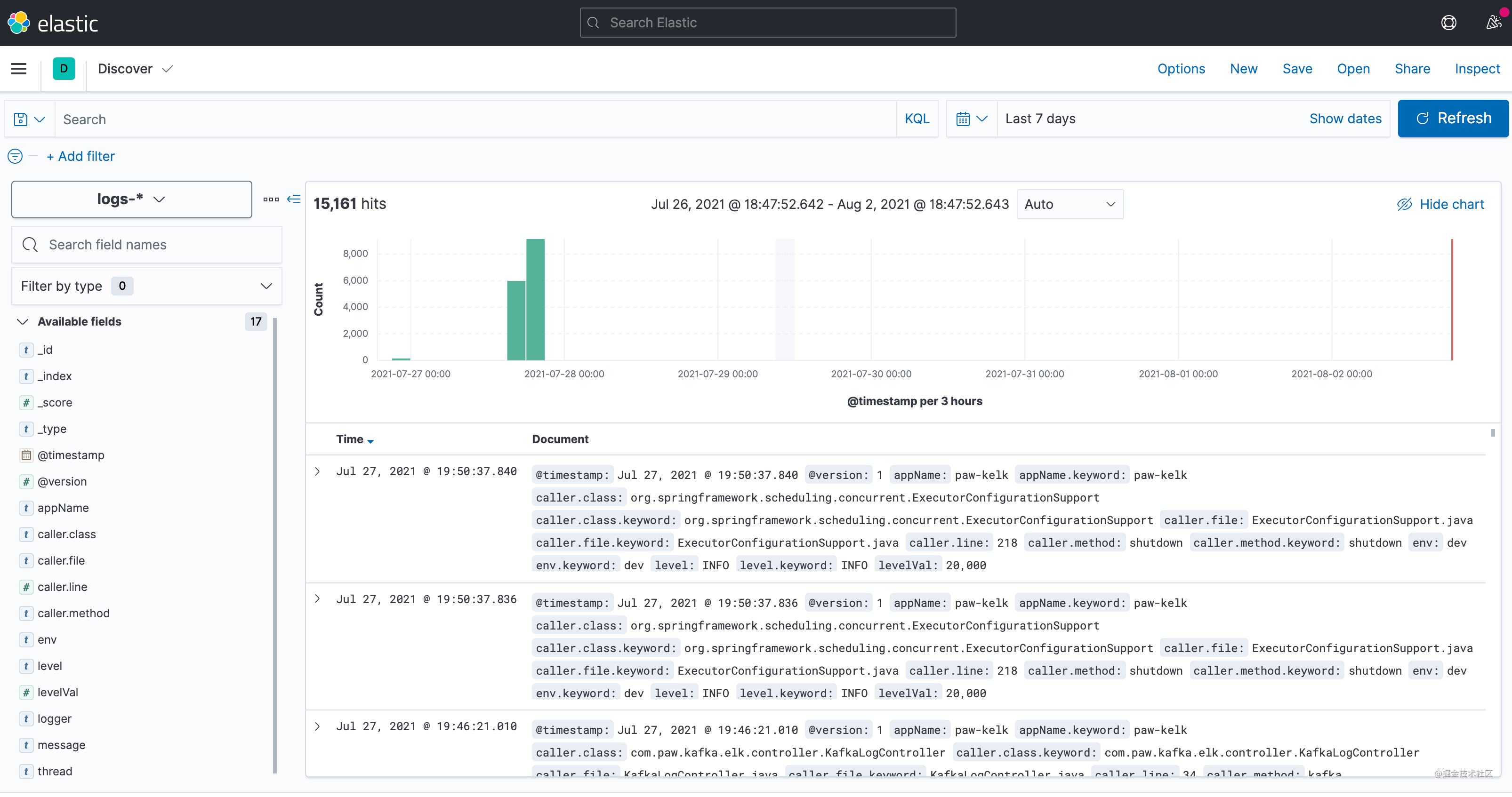

In the menu, open Discover and select the index pattern you just created to view the logs.
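If nothing shows up in Kibana, it can help to query Elasticsearch directly and confirm that documents reached a `logs-*` index. The following is a minimal sketch using the JDK's built-in HTTP client (Java 11 or later) and Elasticsearch's URI search (`_search?q=...`). It assumes Elasticsearch is reachable at `http://localhost:9200` without authentication, as in the Logstash output above; the query `env:dev` and the class name `EsSearchSketch` are illustrative only.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

final class EsSearchSketch {
    // Build a URI-search request for the given index pattern and query string.
    static URI buildSearchUri(String host, String indexPattern, String query, int size) {
        String q = URLEncoder.encode(query, StandardCharsets.UTF_8);
        return URI.create(host + "/" + indexPattern + "/_search?q=" + q + "&size=" + size);
    }

    // Send the request and return the raw JSON response body.
    static String search(URI uri) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        HttpResponse<String> response =
                client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) throws Exception {
        URI uri = buildSearchUri("http://localhost:9200", "logs-*", "env:dev", 5);
        System.out.println(uri);
        // Only contact Elasticsearch when explicitly asked to.
        if (args.length > 0 && args[0].equals("--send")) {
            System.out.println(search(uri));
        }
    }
}
```

Running it with `--send` prints the matching documents as raw JSON; an empty hits list suggests looking at the Logstash output or the Kafka topic first.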

This completes writing Spring Cloud microservice logs to ELK through Kafka.