Elasticsearch Near Real-Time Search

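Elasticsearch near real-time search (refresh). The content is based on the core-knowledge part of the "Elasticsearch 顶尖高手" (Elasticsearch Top Expert) course series by 中华石杉 on Bilibili; the English passages come from Elasticsearch: The Definitive Guide [2.x].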

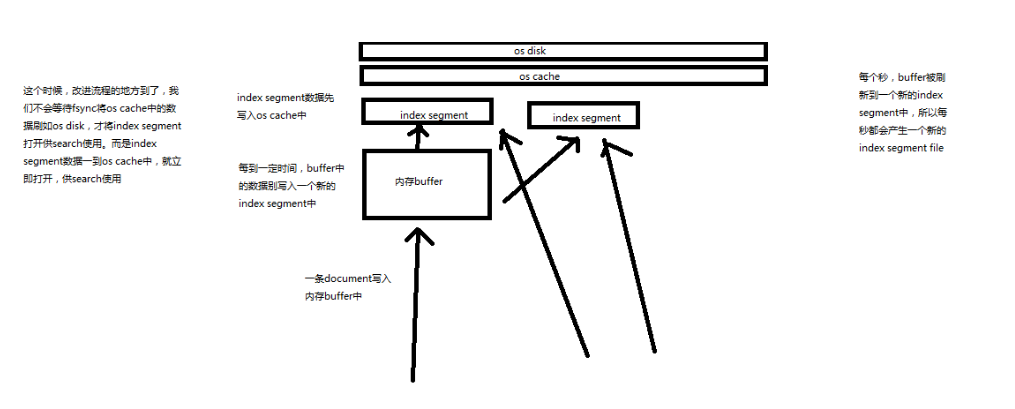

Optimizing the write path to achieve NRT (near real-time) search

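The problem with the existing flow is that a segment cannot be opened for search until fsync has flushed it to disk. As a result, the time between a document being written and it becoming searchable can exceed one minute, which is not near real-time search at all. The main bottleneck is fsync itself: writing data to disk is disk I/O, and it is expensive.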

The original flow

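This is where the flow is improved. ES no longer waits for fsync to flush data from the OS cache to disk before opening an index segment for search. Instead, as soon as the segment's data reaches the OS cache, the segment is opened for search.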

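By default, the buffer is refreshed into a new index segment every second. As long as new documents keep arriving, a new index segment file is therefore produced every second.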

The improved write flow is as follows:

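Writing data into the OS cache and opening it for search is called a refresh, and by default it happens once every second. In other words, every second the data in the buffer is written to a new index segment file, which lands in the OS cache first. That is why ES is near real-time: by default, written data becomes searchable within about 1 second.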
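The following toy simulation in Python makes the three stages concrete: the buffer, the OS cache and the disk. It is a sketch under heavy simplifying assumptions, not Elasticsearch or Lucene code. The class `ToyShard` and its methods are hypothetical names, and search is a naive substring match. `commit()` merely stands in for the fsync step, which this article leaves for the next installment.

```python
class ToyShard:
    """Hypothetical toy model: in-memory buffer, OS-cache segments, committed segments."""

    def __init__(self):
        self.buffer = []              # in-memory indexing buffer: not searchable
        self.cached_segments = []     # written to the OS cache and opened: searchable, not durable
        self.committed_segments = []  # fsynced to disk: searchable and durable

    def index(self, doc):
        # A newly indexed document only lands in the in-memory buffer.
        self.buffer.append(doc)

    def refresh(self):
        # Write the buffer into a new segment in the OS cache and open it for search.
        # No fsync happens here, which is what keeps a refresh cheap.
        if self.buffer:
            self.cached_segments.append(list(self.buffer))
            self.buffer.clear()

    def commit(self):
        # Stand-in for the expensive fsync step: refresh first, then mark every
        # cached segment as durable.
        self.refresh()
        self.committed_segments.extend(self.cached_segments)
        self.cached_segments.clear()

    def search(self, term):
        # Search sees every opened segment, committed or not, but never the buffer.
        # A naive substring match stands in for a real inverted-index lookup.
        segments = self.committed_segments + self.cached_segments
        return [doc for seg in segments for doc in seg if term in doc]


shard = ToyShard()
shard.index("hello world")
print(shard.search("hello"))          # [] (still in the buffer)
shard.refresh()                       # ES does this automatically, every 1s by default
print(shard.search("hello"))          # ['hello world']
print(len(shard.committed_segments))  # 0: searchable, but not yet committed
```

The point of the model is the gap between `refresh()` and `commit()`. A document becomes visible to search as soon as its segment is opened, long before it is durable.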

You can also trigger a refresh manually:

POST /my_index/_refresh

In general there is no need to do this by hand; just let ES handle it.

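Suppose our freshness requirement is relatively loose. For example, it may be enough for a document to become searchable within a minute of being written to ES. In that case we can set a longer refresh_interval, here when creating the index: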

PUT /my_index
{
  "settings": {
    "refresh_interval": "30s"
  }
}

That raises the question: where does the commit happen? That is covered in the next installment.

Near Real-Time Search

Committing a new segment to disk requires an fsync to ensure that the segment is physically written to disk and that data will not be lost if there is a power failure. But an fsync is costly, ...

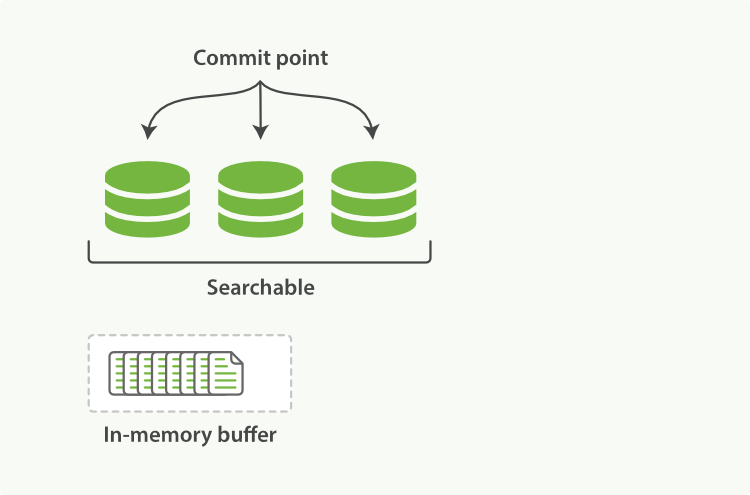

A Lucene index with new documents in the in-memory buffer

Documents in the in-memory indexing buffer are written to a new segment. The new segment is written to the filesystem cache first, which is cheap, and only later is it flushed to disk, which is expensive. But once a file is in the cache, it can be opened and read, just like any other file.

Lucene allows new segments to be written and opened (making the documents they contain visible to search) without performing a full commit.

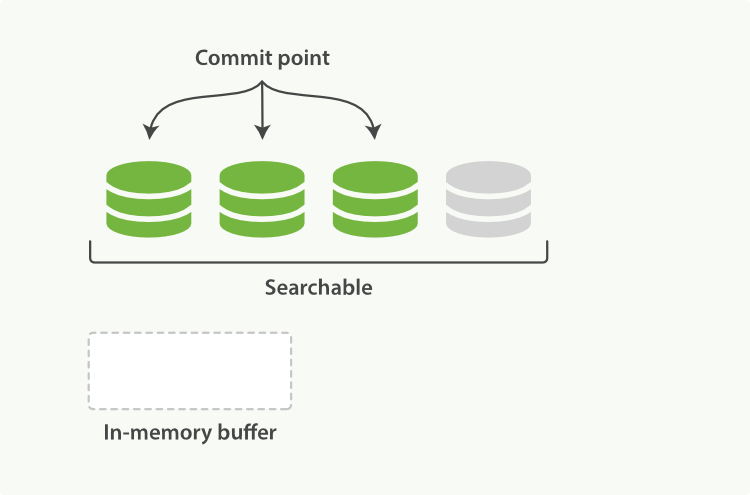

The buffer contents have been written to a segment, which is searchable, but is not yet committed

refresh API

In Elasticsearch, this lightweight process of writing and opening a new segment is called a refresh. By default, every shard is refreshed automatically once every second. This is why we say that Elasticsearch has near real-time search: document changes are not visible to search immediately, but will become visible within 1 second.
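The "within 1 second" bound follows from the refresh schedule: a new document waits at most one interval for the next refresh. Here is a minimal Python sketch of that arithmetic. It assumes, unrealistically, that refreshes fire exactly at multiples of the interval and complete instantly. `visible_at` is a hypothetical helper.

```python
def visible_at(write_ms, interval_ms):
    """Hypothetical helper: when a document written at write_ms becomes searchable.

    Assumes refreshes fire exactly at 0, interval_ms, 2 * interval_ms, ... and
    finish instantly; real refresh scheduling is less regular than this.
    """
    if interval_ms <= 0:
        raise ValueError("interval_ms must be positive (-1 means refresh is disabled)")
    # The first scheduled refresh strictly after the write picks the document up.
    return (write_ms // interval_ms + 1) * interval_ms


for write_ms, interval_ms in [(250, 1_000), (999, 1_000), (5_000, 30_000)]:
    delay = visible_at(write_ms, interval_ms) - write_ms
    print(f"written at {write_ms} ms, interval {interval_ms} ms -> visible after {delay} ms")
```

With the default 1s interval the delay is at most 1000 ms. With a 30s interval, as in the settings example, a document may wait up to 30 seconds before it shows up in search.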

// Refresh all indices
POST /_refresh

// Refresh just the blogs index
POST /blogs/_refresh

While a refresh is much lighter than a commit, it still has a performance cost.
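The following minimal Python sketch calls the refresh API from a program rather than a console, using only the standard library. It assumes an unsecured cluster at `http://localhost:9200`; adjust this for your setup. The helper `refresh_request` is a hypothetical name. The code only builds the requests, and sending them requires a running cluster.

```python
import urllib.request

# Assumption: an unsecured local cluster; change this for your environment.
ES_URL = "http://localhost:9200"


def refresh_request(index=None, base_url=ES_URL):
    """Build, but do not send, POST /_refresh or POST /<index>/_refresh."""
    path = f"/{index}/_refresh" if index else "/_refresh"
    return urllib.request.Request(base_url + path, method="POST")


all_indices = refresh_request()        # POST /_refresh
blogs_only = refresh_request("blogs")  # POST /blogs/_refresh
print(all_indices.get_method(), all_indices.full_url)
print(blogs_only.get_method(), blogs_only.full_url)

# Sending requires a running cluster, for example:
# with urllib.request.urlopen(blogs_only) as resp:
#     print(resp.status, resp.read().decode())
```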

Not all use cases require a refresh every second... You can reduce the frequency of refreshes on a per-index basis by setting the refresh_interval:

PUT /my_logs
{
  "settings": {
    // Refresh the my_logs index every 30 seconds
    "refresh_interval": "30s"
  }
}

The refresh_interval can be updated dynamically on an existing index.

// Disable automatic refreshes
PUT /my_logs/_settings
{ "refresh_interval": -1 }

// Refresh automatically every second
PUT /my_logs/_settings
{ "refresh_interval": "1s" }

The refresh_interval expects a duration such as 1s (1 second) or 2m (2 minutes). An absolute number like 1 means 1 millisecond, a sure way to bring your cluster to its knees.
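The following hypothetical Python helper illustrates the unit rule quoted above. It interprets a `refresh_interval` value the way the guide describes for 2.x. It is not Elasticsearch's own parser, and it covers only the ms, s, m, h, and d units. Later Elasticsearch releases may reject unitless numbers rather than reading them as milliseconds, so check the documentation for your version.

```python
import re

# Time units accepted by Elasticsearch, in milliseconds (a subset, for illustration).
UNIT_MS = {"ms": 1, "s": 1_000, "m": 60_000, "h": 3_600_000, "d": 86_400_000}


def refresh_interval_ms(value):
    """Hypothetical helper: interpret refresh_interval per the 2.x guide's rule.

    Returns None for -1 (automatic refresh disabled).
    """
    text = str(value).strip()
    if text == "-1":
        return None
    match = re.fullmatch(r"(\d+)(ms|s|m|h|d)?", text)
    if match is None:
        raise ValueError(f"cannot parse refresh_interval {value!r}")
    number, unit = match.groups()
    # A bare number carries no unit and is read as milliseconds.
    return int(number) * UNIT_MS[unit or "ms"]


for value in ["1s", "2m", "30s", 1, -1]:
    print(repr(value), "->", refresh_interval_ms(value))
```

A bare `1` comes out as 1 ms, a refresh a thousand times per second. That is why the guide warns against it.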