Containers & Services: Scaling (Part 2)

1 metrics-server error messages

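Even after installing metrics-server with Helm, it may still report errors. Using a case I ran into, this section walks through how I analyzed and resolved the problem.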

1.1 Problem description

1.2 Pod information

    lijingyong:container flamingskys$ kubectl get pods -n kube-system
    NAME                                     READY   STATUS             RESTARTS   AGE
    coredns-f9fd979d6-4xqfp                  1/1     Running            3          27d
    coredns-f9fd979d6-555sx                  1/1     Running            3          27d
    etcd-docker-desktop                      1/1     Running            3          27d
    kube-apiserver-docker-desktop            1/1     Running            8          27d
    kube-controller-manager-docker-desktop   1/1     Running            3          27d
    kube-proxy-h26lk                         1/1     Running            3          27d
    kube-scheduler-docker-desktop            1/1     Running            19         27d
    metrics-server-6bdfb6d589-x79mr          0/1     CrashLoopBackOff   385        40h
    storage-provisioner                      1/1     Running            24         27d
    vpnkit-controller                        1/1     Running            3          27d

The problem is obvious: the pod metrics-server-6bdfb6d589-x79mr is stuck in CrashLoopBackOff, so the next step is to look at its details.
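Spotting the broken pod by eye is easy with ten rows, but the same check can be scripted. Note that the pod's phase is still Running (the describe output in 1.3 shows "Status: Running"), so filtering on phase alone would miss a CrashLoopBackOff pod; the minimal sketch below looks at the READY and STATUS columns instead. It assumes the five-column layout shown above (newer kubectl versions append "(… ago)" to RESTARTS, which this sketch would skip), and the names PodRow, parse_get_pods and unhealthy are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PodRow:
    name: str
    ready: str  # "ready/total" containers, e.g. "0/1"
    status: str
    restarts: int


def parse_get_pods(output: str) -> list[PodRow]:
    """Parse the default table printed by `kubectl get pods`."""
    rows = []
    for line in output.strip().splitlines():
        fields = line.split()
        # Skip the header and any line that doesn't have exactly the five
        # default columns (NAME READY STATUS RESTARTS AGE), e.g. the prompt line.
        if len(fields) != 5 or fields[0] == "NAME":
            continue
        name, ready, status, restarts, _age = fields
        rows.append(PodRow(name, ready, status, int(restarts)))
    return rows


def unhealthy(rows: list[PodRow]) -> list[PodRow]:
    """Pods with fewer ready containers than total, or a STATUS other than Running."""
    bad = []
    for row in rows:
        ready, total = (int(n) for n in row.ready.split("/"))
        if ready < total or row.status != "Running":
            bad.append(row)
    return bad


sample = """NAME READY STATUS RESTARTS AGE
coredns-f9fd979d6-4xqfp 1/1 Running 3 27d
metrics-server-6bdfb6d589-x79mr 0/1 CrashLoopBackOff 385 40h"""

for pod in unhealthy(parse_get_pods(sample)):
    print(pod.name, pod.status, pod.restarts)
# prints: metrics-server-6bdfb6d589-x79mr CrashLoopBackOff 385
```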

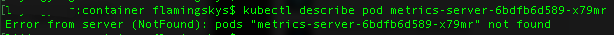

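Note: running kubectl describe pod metrics-server-6bdfb6d589-x79mr on its own does not work. The pod lives in the kube-system namespace, so you must pass the namespace; otherwise kubectl reports that the pod cannot be found.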

1.3 Pod details

The output of kubectl describe pod -n kube-system metrics-server-6bdfb6d589-x79mr is as follows:

    Name:           metrics-server-6bdfb6d589-x79mr
    Namespace:      kube-system
    Priority:       0
    Node:           docker-desktop/192.168.65.4
    Start Time:     Sat, 24 Apr 2021 23:24:03 +0800
    Labels:         app.kubernetes.io/instance=metrics-server
                    app.kubernetes.io/managed-by=Helm
                    app.kubernetes.io/name=metrics-server
                    helm.sh/chart=metrics-server-5.8.5
                    pod-template-hash=6bdfb6d589
    Annotations:
    Status:         Running
    IP:             10.1.0.18
    IPs:
      IP:           10.1.0.18
    Controlled By:  ReplicaSet/metrics-server-6bdfb6d589
    Containers:
      metrics-server:
        Container ID:   docker://bbfc367728248cf676f084eb526bec02b2a0dfb6e8c1e4d6dd463a85c111cdfe
        Image:          docker.io/bitnami/metrics-server:0.4.3-debian-10-r0
        Image ID:       docker-pullable://bitnami/metrics-server@sha256:48c75855698863a4968bd971b61c14fe84a4516e9ddf91373cf613c6862ed620
        Port:           8443/TCP
        Host Port:      0/TCP
        Command:
          metrics-server
        Args:
          --secure-port=8443
        State:          Waiting
          Reason:       CrashLoopBackOff
        Last State:     Terminated
          Reason:       Completed
          Exit Code:    0
          Started:      Mon, 26 Apr 2021 15:43:40 +0800
          Finished:     Mon, 26 Apr 2021 15:44:09 +0800
        Ready:          False
        Restart Count:  389
        Liveness:       http-get https://:https/livez delay=0s timeout=1s period=10s #success=1 #failure=3
        Readiness:      http-get https://:https/readyz delay=0s timeout=1s period=10s #success=1 #failure=3
        Environment:
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from metrics-server-token-78x8d (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      metrics-server-token-78x8d:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  metrics-server-token-78x8d
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:
    Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                     node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type     Reason     Age                    From     Message
      ----     ------     ----                   ----     -------
      Normal   Pulled     44m (x374 over 29h)    kubelet  Container image "docker.io/bitnami/metrics-server:0.4.3-debian-10-r0" already present on machine
      Warning  Unhealthy  19m (x1124 over 29h)   kubelet  Liveness probe failed: HTTP probe failed with statuscode: 500
      Warning  BackOff    4m8s (x4698 over 29h)  kubelet  Back-off restarting failed container

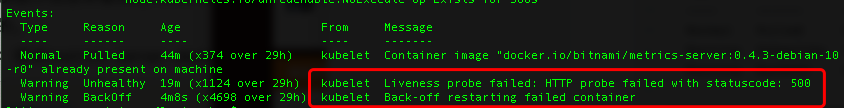

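That is the complete describe output for my pod. The Events section at the very end shows the cause: the liveness probe fails with "HTTP probe failed with statuscode: 500", and the kubelet keeps backing off before restarting the failed container.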
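When the describe output is long, the Warning events can be pulled out mechanically. The minimal sketch below parses rows of the Events table with a regular expression and sorts them by how often they repeated; it assumes the column layout shown above (Type, Reason, Age with an optional "(xN over …)", From, Message), and the names EVENT_RE, Event and warnings are hypothetical.

```python
import re
from typing import NamedTuple

# One row of the "Events:" table printed by `kubectl describe`, e.g.
# "Warning Unhealthy 19m (x1124 over 29h) kubelet Liveness probe failed: ..."
EVENT_RE = re.compile(
    r"^(?P<type>Normal|Warning)\s+"
    r"(?P<reason>\S+)\s+"
    r"(?P<age>\S+)"
    r"(?:\s+\(x(?P<count>\d+) over (?P<span>\S+)\))?\s+"
    r"(?P<source>\S+)\s+"
    r"(?P<message>.+)$"
)


class Event(NamedTuple):
    type: str
    reason: str
    count: int
    source: str
    message: str


def warnings(describe_output: str) -> list[Event]:
    """Return the Warning events from `kubectl describe` output, most frequent first."""
    found = []
    for line in describe_output.splitlines():
        m = EVENT_RE.match(line.strip())
        if m and m["type"] == "Warning":
            # Rows without "(xN over ...)" describe an event seen once.
            count = int(m["count"]) if m["count"] else 1
            found.append(Event(m["type"], m["reason"], count, m["source"], m["message"]))
    return sorted(found, key=lambda e: e.count, reverse=True)
```

Fed the Events section above, this returns the BackOff row (4698 occurrences) followed by the Unhealthy row whose message ends in "statuscode: 500".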

2 Liveness probe

2.1 Health checks in k8s

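Kubernetes runs a kubelet on every node, and Container Probes, i.e. container health checks, are executed periodically by the kubelet.

When creating a Pod, you can use two kinds of probes, livenessProbe and readinessProbe, to check the state of the containers in the Pod. A livenessProbe checks whether the application in the container is still alive; if the check fails, the kubelet kills the container process, and whether the container is then restarted depends on the Pod's restart policy. A readinessProbe checks whether the application in the container can serve requests; if it fails, the Endpoint Controller removes the Pod's IP from the Service's endpoints.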
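To make the liveness rules concrete for this pod, here is a small idealized simulation using the values from the describe output (delay=0s, period=10s, #failure=3). It relies on the documented rule that an httpGet probe succeeds for any status code from 200 up to (not including) 400. Assumptions: each probe fires exactly at initialDelaySeconds + i × periodSeconds, with no timeout or scheduling jitter; the names ProbeSpec and liveness_kill_time are hypothetical. A readinessProbe failure would only mark the Pod not ready instead of killing the container.

```python
from dataclasses import dataclass
from typing import List, Optional


def http_probe_succeeded(status_code: int) -> bool:
    # For httpGet probes, Kubernetes counts any status in [200, 400) as success.
    return 200 <= status_code < 400


@dataclass
class ProbeSpec:
    # Defaults mirror the describe output above: delay=0s period=10s #failure=3
    initial_delay_s: int = 0
    period_s: int = 10
    failure_threshold: int = 3


def liveness_kill_time(spec: ProbeSpec, status_codes: List[int]) -> Optional[int]:
    """Idealized model: probe i fires at initial_delay_s + i * period_s.

    Returns the time (seconds after container start) at which the probe reaches
    failure_threshold consecutive failures, i.e. when the kubelet would kill the
    container, or None if that never happens.
    """
    consecutive_failures = 0
    for i, code in enumerate(status_codes):
        if http_probe_succeeded(code):
            consecutive_failures = 0  # a success resets the failure streak
        else:
            consecutive_failures += 1
            if consecutive_failures >= spec.failure_threshold:
                return spec.initial_delay_s + i * spec.period_s
    return None


# Every probe returns 500, as in the Events above: killed at the 3rd failure.
print(liveness_kill_time(ProbeSpec(), [500, 500, 500, 500]))  # prints 20
```

Under these assumptions the container cannot live much longer than about 20 s, which is roughly in line with the short run in Last State (Started 15:43:40, Finished 15:44:09); the model ignores start-up time and probe timing, so the numbers won't match exactly.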

2.2 Liveness probe failed

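The Events messages confirm that the liveness probe got an HTTP 500 response, but we still need more detail.

The describe output, however, contains nothing further, so another approach is needed.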
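One possible way to get past the bare status code is to call the health endpoint yourself and read the response body, which may say which check failed. The minimal sketch below assumes the container port 8443 shown in the describe output has been forwarded locally, for example with kubectl port-forward -n kube-system pod/metrics-server-6bdfb6d589-x79mr 8443:8443; because the container restarts roughly every half minute, this may take a few attempts. Certificate verification is switched off to mirror the kubelet, which skips verification for HTTPS probes. The helper name fetch_probe is hypothetical.

```python
import ssl
import urllib.error
import urllib.request


def fetch_probe(url: str, timeout: float = 1.0) -> tuple[int, str]:
    """GET a health endpoint and return (status code, response body)."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # must be disabled before CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE  # debugging only: trust any certificate
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({}),  # ignore *_proxy env vars for localhost
        urllib.request.HTTPSHandler(context=ctx),
    )
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", "replace")
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError, which still carries status and body.
        return err.code, err.read().decode("utf-8", "replace")


# Example (requires the port-forward described above):
# print(fetch_probe("https://localhost:8443/livez"))
```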

3 metrics-server

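Back to the original goal, which was simply to install metrics-server. So go straight to its GitHub repository and follow the Installation section of the README. The command is: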

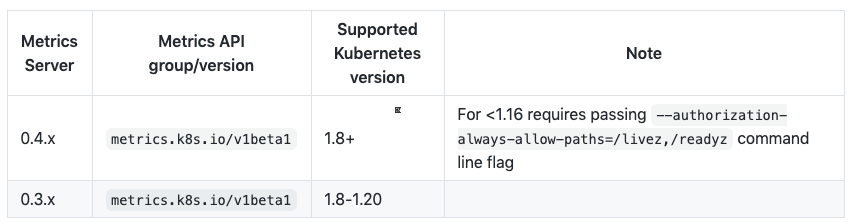

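    kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Note that the installation instructions include a tip:

Compatibility notes:

So this looks like the source of the problem; adjusting the components.yaml above accordingly resolves it.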
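The text above does not say exactly which line of components.yaml was changed. One adjustment often needed on local clusters such as Docker Desktop, and described in the metrics-server README's requirements, is passing --kubelet-insecure-tls so metrics-server does not validate the kubelet's serving certificate; treat it as an assumption here, and note that it weakens TLS verification. The minimal sketch below appends such an argument to the container named metrics-server in a Deployment manifest that has already been loaded into a dict (loading the YAML, e.g. with the third-party PyYAML package, is left out). The helper name add_container_args is hypothetical.

```python
import copy


def add_container_args(manifest: dict, container_name: str, extra_args: list[str]) -> dict:
    """Return a copy of a Deployment manifest with extra args added to one container.

    Arguments already present are not duplicated, so the patch is idempotent.
    """
    patched = copy.deepcopy(manifest)  # leave the caller's manifest untouched
    for container in patched["spec"]["template"]["spec"]["containers"]:
        if container["name"] == container_name:
            args = container.setdefault("args", [])
            for arg in extra_args:
                if arg not in args:
                    args.append(arg)
    return patched


# Hypothetical usage on the metrics-server Deployment from components.yaml:
# deployment = add_container_args(deployment, "metrics-server", ["--kubelet-insecure-tls"])
```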