Hadoop实战篇-集群版(2)

Hadoop实战篇(2)

作者 | WenasWei

前言

在上一篇的Hadoop实战篇介绍过了Hadoop-离线批处理技术的本地模式和伪集群模式安装,接下来继续学习 Hadoop 集群模式安装; 将从以下几点介绍:

Linux 主机部署规划

Zookeeper 注册中心安装

集群模式安装

Hadoop 的目录结构说明和命令帮助文档

集群动态增加和删除节点

一 Linux环境的配置与安装Hadoop

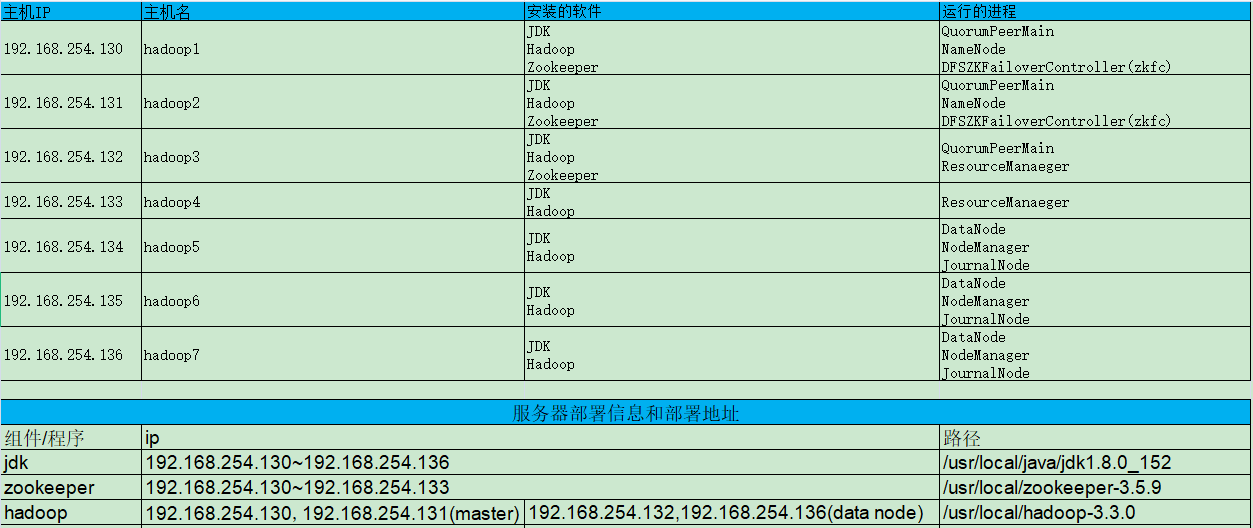

Hadoop集群部署规划:

Hadoop需要使用到 Linux 环境上的一些基本的配置需要,Hadoop 用户组和用户添加,免密登录操作,JDK安装

1.1 VMWare中Ubuntu网络配置

在使用 VMWare 安装 Ubuntu18.04-Linux 操作系统下时产生系统配置问题可以通过分享的博文进行配置,CSDN跳转链接:

其中包含了以下几个重要操作步骤:

Ubuntu系统信息与修改主机名

Windows设置VMWare的NAT网络

Linux网关设置与配置静态IP

Linux修改hosts文件

Linux免密码登录

1.2 Hadoop 用户组和用户添加

1.2.1 添加Hadoop用户组和用户

以 root 用户登录 Linux-Ubuntu 18.04虚拟机,执行命令:

$ groupadd hadoop

$ useradd -r -g hadoop hadoop

1.2.2 赋予Hadoop用户目录权限

将 目录权限赋予 Hadoop 用户, 命令如下:

$ chown -R hadoop.hadoop /usr/local/

$ chown -R hadoop.hadoop /tmp/

$ chown -R hadoop.hadoop /home/

1.2.3 赋予Hadoop用户sodu权限

编辑文件,在下添加

$ vi /etc/sudoers

Defaults env_reset

Defaults mail_badpass

Defaults secure_path="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin"

root ALL=(ALL:ALL) ALL

hadoop ALL=(ALL:ALL) ALL

%admin ALL=(ALL) ALL

%sudo ALL=(ALL:ALL) ALL

1.2.4 赋予Hadoop用户登录密码

$ passwd hadoop

Enter new UNIX password: 输入新密码

Retype new UNIX password: 确认新密码

passwd: password updated successfully

1.3 JDK安装

Linux安装JDK可以参照分享的博文《Logstash-数据流引擎》-<第三节:Logstash安装>--(第二小节: 3.2 Linux安装JDK进行)安装配置到每一台主机上,CSDN跳转链接:

1.4 Hadoop官网下载

官网下载:https://hadoop.apache.org/releases.html Binary download

使用 wget 命名下载(下载目录是当前目录):

例如:version3.3.0 https://mirrors.bfsu.edu.cn/apache/hadoop/common/hadoop-3.3.0/hadoop-3.3.0.tar.gz

$ wget https://mirrors.bfsu.edu.cn/apache/hadoop/common/hadoop-3.3.0/hadoop-3.3.0.tar.gz

解压、移动到你想要放置的文件夹:

$ mv ./hadoop-3.3.0.tar.gz /usr/local

$ cd /usr/local

$ tar -zvxf hadoop-3.3.0.tar.gz

1.5 配置Hadoop环境

修改配置文件:

$ vi /etc/profile

# 类同JDK配置添加

export JAVA_HOME=/usr/local/java/jdk1.8.0_152

export JRE_HOME=/usr/local/java/jdk1.8.0_152/jre

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-3.3.0

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH:$HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

使配置文件生效

$ source /etc/profile

查看Hadoop配置是否成功

$ hadoop version

Hadoop 3.3.0

Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r aa96f1871bfd858f9bac59cf2a81ec470da649af

Compiled by brahma on 2020-07-06T18:44Z

Compiled with protoc 3.7.1

From source with checksum 5dc29b802d6ccd77b262ef9d04d19c4

This command was run using /usr/local/hadoop-3.3.0/share/hadoop/common/hadoop-common-3.3.0.jar

从结果可以看出,Hadoop版本为 ,说明 Hadoop 环境安装并配置成功。

二 Zookeeper注册中心

Zookeeper注册中心章节主要介绍如下:

Zookeeper介绍

Zookeeper下载安装

Zookeeper配置文件

启动zookeeper集群验证

Zookeeper 集群主机规划:

hadoop1

hadoop2

hadoop3

2.1 Zookeeper介绍

ZooKeeper是一个分布式的,开放源码的分布式应用程序协调服务,是Google的Chubby一个开源的实现,是Hadoop和Hbase的重要组件。它是一个为分布式应用提供一致性服务的软件,提供的功能包括:配置维护、域名服务、分布式同步、组服务等。

ZooKeeper的目标就是封装好复杂易出错的关键服务,将简单易用的接口和性能高效、功能稳定的系统提供给用户,其中包含一个简单的原语集,提供Java和C的接口,ZooKeeper代码版本中,提供了分布式独享锁、选举、队列的接口。其中分布锁和队列有Java和C两个版本,选举只有Java版本。

Zookeeper负责服务的协调调度, 当客户端发起请求时, 返回正确的服务器地址。

2.2 Zookeeper下载安装

Linux(执行主机-hadoop1) 下载 apache-zookeeper-3.5.9-bin 版本包, 移动到安装目录: ,解压并重命名为: :

$ wget https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.5.9/apache-zookeeper-3.5.9-bin.tar.gz

$ mv apache-zookeeper-3.5.9-bin.tar.gz /usr/local/

$ cd /usr/local/

$ tar -zxvf apache-zookeeper-3.5.9-bin.tar.gz

$ mv apache-zookeeper-3.5.9-bin zookeeper-3.5.9

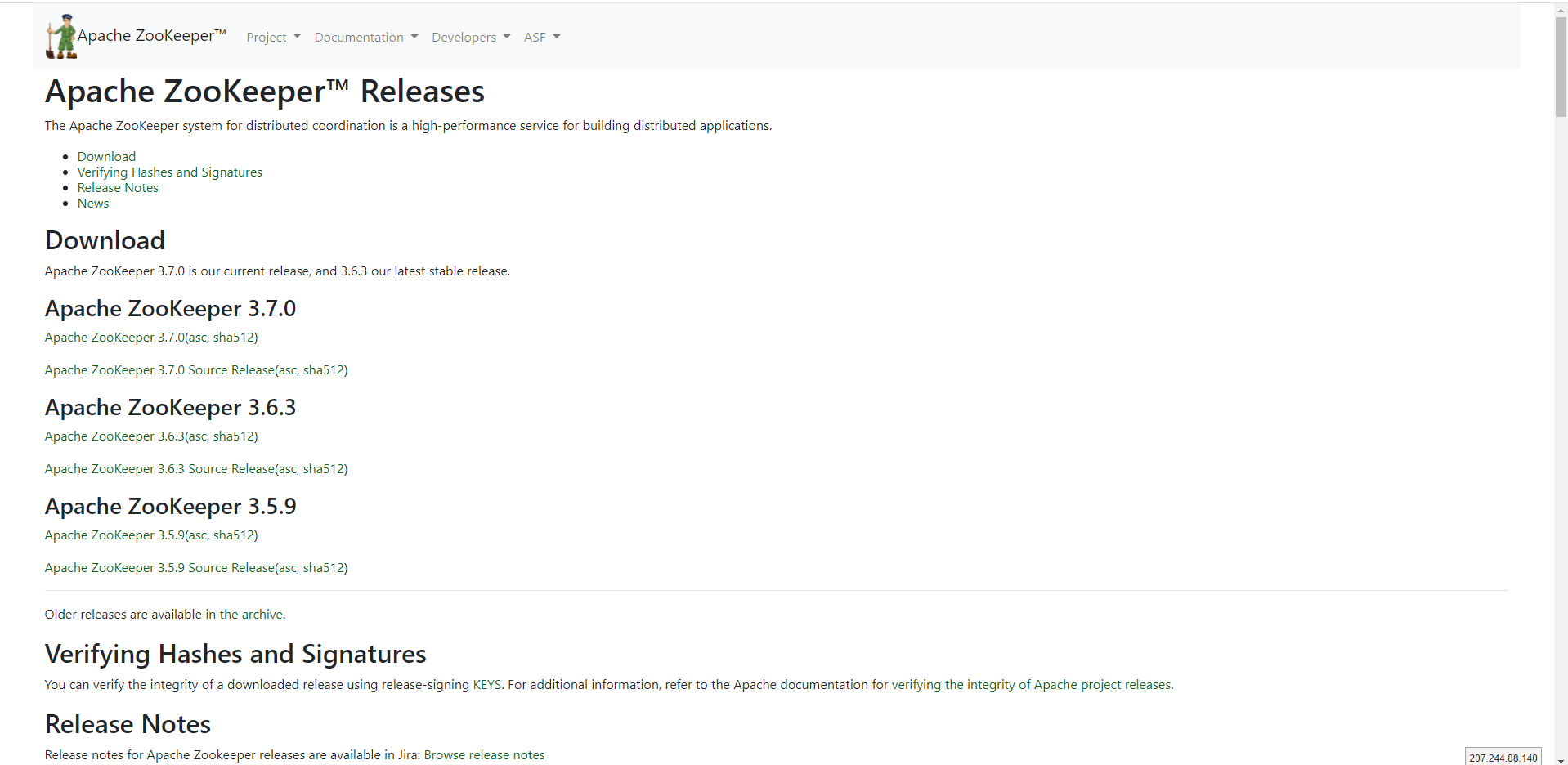

离线安装可以到指定官网下载版本包上传安装,官网地址: http://zookeeper.apache.org/releases.html

如图所示:

2.3 Zookeeper配置文件

2.3.1 配置Zookeeper环境变量

配置Zookeeper环境变量,需要在 配置文件中修改添加,具体配置如下:

export JAVA_HOME=/usr/local/java/jdk1.8.0_152

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.5.9

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH:$HOME/bin:$ZOOKEEPER_HOME

修改完成后刷新环境变量配置文件:

$ source /etc/profile

其中已经包含安装了JDK配置,如无安装JDK可以查看Hadoop实战篇(1)中的安装

2.3.2 Zookeeper配置文件

在zookeeper根目录下创建文件夹: 和

$ cd /usr/local/zookeeper-3.5.9

$ mkdir data

$ mkdir dataLog

切换到新建的 data 目录下,创建 myid 文件,添加具体内容为数字1,如下所示:

$ cd /usr/local/zookeeper-3.5.9/data

$ vi myid

# 添加内容数字1

1

$ cat myid

1

进入conf目录中修改配置文件

复制配置文件 并且修改名称为 :

$ cp zoo_sample.cfg zoo.cfg

修改 zoo.cfg 文件,修改内容如下:

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/usr/local/zookeeper-3.5.9/data

dataLogDir=/usr/local/zookeeper-3.5.9/dataLog

clientPort=2181

server.1=hadoop1:2888:3888

server.2=hadoop2:2888:3888

server.3=hadoop3:2888:3888

2.3.3 将Zookeeper和系统环境变量拷贝到其他服务器

根据对服务器的规划,将 Zookeeper 和配置文件 拷贝到 hadoop2 和 hadoop3 主机上:

$ scp -r zookeeper-3.5.9/ root@hadoop2:/usr/local/

$ scp -r zookeeper-3.5.9/ root@hadoop3:/usr/local/

$ scp /etc/profile root@hadoop2:/etc/

$ scp /etc/profile root@hadoop3:/etc/

登陆到 hadoop2 和 hadoop3分别修改 hadoop2 主机和 hadoop3 主机上的配置文件内容: 为 2 和 3

并刷新环境变量配置文件:

$ source /etc/profile

2.4 启动zookeeper集群

在 hadoop1、hadoop2 和 hadoop3 三台服务器上分别启动 Zookeeper 服务器并查看 Zookeeper 运行状态

hadoop1主机:

$ cd /usr/local/zookeeper-3.5.9/bin/

$ ./zkServer.sh start

$ ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper-3.5.9/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

说明hadoop1主机上 zookeeper 的运行状态是 follower

hadoop2主机:

$ cd /usr/local/zookeeper-3.5.9/bin/

$ ./zkServer.sh start

$ ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper-3.5.9/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader

说明hadoop2主机上 zookeeper 的运行状态是 leader

hadoop3主机:

$ cd /usr/local/zookeeper-3.5.9/bin/

$ ./zkServer.sh start

$ ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper-3.5.9/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

说明hadoop3主机上 zookeeper 的运行状态是 follower

三 集群模式安装

3.1 Hadoop配置文件修改

3.1.1 修改配置 hadoop-env.sh

主机hadoop1 配置 ,在 hadoop-env.sh 文件中指定 JAVA_HOME 的安装目录: ,配置如下:

export JAVA_HOME=/usr/local/java/jdk1.8.0_152

# 配置允许使用 root 账户权限

export HDFS_DATANODE_USER=root

export HADOOP_SECURE_USER=root

export HDFS_NAMENODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

export HADOOP_SHELL_EXECNAME=root

export HDFS_JOURNALNODE_USER=root

export HDFS_ZKFC_USER=root

3.1.2 修改配置 core-site.xml

主机hadoop1 配置 ,在 文件中指定 Zookeeper 的集群节点 ,配置如下:

fs.defaultFS

hdfs://ns/

hadoop.tmp.dir

/usr/local/hadoop-3.3.0/tmp

Abase for other temporary directories.

ha.zookeeper.quorum

hadoop1:2181,hadoop2:2181,hadoop3:2181

3.1.3 修改配置 hdfs-site.xml

主机hadoop1 配置 ,在 文件中指定 namenodes 节点, ,配置如下:

dfs.nameservices

ns

dfs.ha.namenodes.ns

nn1,nn2

dfs.namenode.rpc-address.ns.nn1

hadoop1:9000

dfs.namenode.http-address.ns.nn1

hadoop1:9870

dfs.namenode.rpc-address.ns.nn2

hadoop2:9000

dfs.namenode.http-address.ns.nn2

hadoop2:9870

dfs.namenode.shared.edits.dir

qjournal://hadoop5:8485;hadoop6:8485;hadoop7:8485/ns

dfs.journalnode.edits.dir

/usr/local/hadoop-3.3.0/data/journal

dfs.client.failover.proxy.provider.ns

org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider

dfs.ha.fencing.methods

sshfence

dfs.ha.fencing.ssh.private-key-files

/root/.ssh/id_rsa

dfs.ha.fencing.ssh.connect-timeout

30000

dfs.ha.automatic-failover.enabled

true

3.1.4 修改配置 mapred-site.xml

主机hadoop1 配置 ,在 文件中指定 mapreduce 信息, ,配置如下:

mapreduce.framework.name

yarn

yarn.app.mapreduce.am.env

HADOOP_MAPRED_HOME=${HADOOP_HOME}

mapreduce.map.env

HADOOP_MAPRED_HOME=${HADOOP_HOME}

mapreduce.reduce.env

HADOOP_MAPRED_HOME=${HADOOP_HOME}

mapreduce.framework.name

yarn

mapreduce.jobhistory.address

hadoop1:10020

mapreduce.jobhistory.webapp.address

hadoop1:19888

3.1.5 修改配置 yarn-site.xml

主机hadoop1 配置 ,在 文件中指定 ResourceManaeger 节点, ,配置如下:

yarn.resourcemanager.ha.enabled

true

yarn.resourcemanager.cluster-id

yrc

yarn.resourcemanager.ha.rm-ids

rm1,rm2

yarn.resourcemanager.hostname.rm1

hadoop3

yarn.resourcemanager.hostname.rm2

hadoop4

yarn.resourcemanager.address.rm1

hadoop3:8032

yarn.resourcemanager.scheduler.address.rm1

hadoop3:8030

yarn.resourcemanager.webapp.address.rm1

hadoop3:8088

yarn.resourcemanager.resource-tracker.address.rm1

hadoop3:8031

yarn.resourcemanager.admin.address.rm1

hadoop3:8033

yarn.resourcemanager.ha.admin.address.rm1

hadoop3:23142

yarn.resourcemanager.address.rm2

hadoop4:8032

yarn.resourcemanager.scheduler.address.rm2

hadoop4:8030

yarn.resourcemanager.webapp.address.rm2

hadoop4:8088

yarn.resourcemanager.resource-tracker.address.rm2

hadoop4:8031

yarn.resourcemanager.admin.address.rm2

hadoop4:8033

yarn.resourcemanager.ha.admin.address.rm2

hadoop4:23142

yarn.resourcemanager.zk-address

hadoop1:2181,hadoop2:2181,hadoop3:2181

yarn.nodemanager.aux-services

mapreduce_shuffle

mapreduce.map.output.compress

true

3.1.6 修改 workers 文件

主机hadoop1 配置 ,在 文件中指定 DataNode 节点, ,配置如下:

hadoop5

hadoop6

hadoop7

3.2 Hadoop节点拷贝配置

3.2.1 将配置好的Hadoop复制到其他节点

将在 hadoop1 上安装并配置好的 Hadoop 复制到其他服务器上,操作如下所示:

scp -r /usr/local/hadoop-3.3.0/ hadoop2:/usr/local/

scp -r /usr/local/hadoop-3.3.0/ hadoop3:/usr/local/

scp -r /usr/local/hadoop-3.3.0/ hadoop4:/usr/local/

scp -r /usr/local/hadoop-3.3.0/ hadoop5:/usr/local/

scp -r /usr/local/hadoop-3.3.0/ hadoop6:/usr/local/

scp -r /usr/local/hadoop-3.3.0/ hadoop7:/usr/local/

3.2.2 复制 hadoop1 上的系统环境变量

将在 hadoop1 上安装并配置好的系统环境变量复制到其他服务器上,操作如下所示:

sudo scp /etc/profile hadoop2:/etc/

sudo scp /etc/profile hadoop3:/etc/

sudo scp /etc/profile hadoop4:/etc/

sudo scp /etc/profile hadoop5:/etc/

sudo scp /etc/profile hadoop6:/etc/

sudo scp /etc/profile hadoop7:/etc/

使系统环境变量生效

source /etc/profile

hadoop version

3.3 启动 hadoop 集群(1)

启动 hadoop 集群步骤分为:

启动并验证 journalnode 进程

格式化HDFS

格式化ZKFC

启动并验证 NameNode 进程

同步元数据信息

启动并验证备用NameNode 进程

启动并验证DataNode进程

启动并验证YARN

启动并验证ZKFC

查看每台服务器上运行的运行信息

3.3.1 启动并验证 journalnode 进程

(1)启动 journalnode 进程,在hadoop1服务器上执行如下命令,启动 journalnode 进程:

hdfs --workers --daemon start journalnode

(2)验证 journalnode 进程是否启动成功,分别在hadoop5、hadoop6和hadoop7三台服务器上分别执行 命令,执行结果如下

hadoop5服务器:

root@hadoop5:~# jps

17322 Jps

14939 JournalNode

hadoop6服务器:

root@hadoop6:~# jps

13577 JournalNode

15407 Jps

hadoop7服务器:

root@hadoop7:~# jps

13412 JournalNode

15212 Jps

3.3.2 格式化HDFS

在hadoop1服务器上执行如下命令,格式化HDFS:

hdfs namenode -format

执行后,命令行会输出成功信息:

common.Storage: Storage directory /usr/local/hadoop-3.3.0/tmp/dfs/name has been successfully formatted.

3.3.3 格式化ZKFC

在hadoop1服务器上执行如下命令,格式化 ZKFC :

hdfs zkfc -formatZK

执行后,命令行会输出成功信息:

ha.ActiveStandbyElector: Successfuly created /hadoop-ha/ns in ZK.

3.3.4 启动并验证 NameNode 进程

启动NameNode进程,在hadoop1服务器上执行

hdfs --daemon start namenode

验证NameNode进程启动成功,

# jps

26721 NameNode

50317 Jps

3.3.5 同步元数据信息

在hadoop2服务器上执行如下命令,进行元数据信息的同步操作:

hdfs namenode -bootstrapStandby

执行后,命令行成功信息:

common.Storage: Storage directory /usr/local/hadoop-3.3.0/tmp/dfs/name has been successfully formatted.

3.3.6 启动并验证备用NameNode进程

在hadoop2服务器上执行如下命令,启动备用NameNode 进程:

hdfs --daemon start namenode

验证备用的NameNode 进程

# jps

21482 NameNode

50317 Jps

3.3.7 启动并验证DataNode进程

在hadoop1服务器上执行如下命令,启动DataNode进程

hdfs --workers --daemon start datanode

验证DataNode进程在hadoop5、hadoop6和hadoop7服务器上执行:

hadoop5服务器:

# jps

31713 Jps

16435 DataNode

14939 JournalNode

15406 NodeManager

hadoop6服务器:

# jps

13744 NodeManager

13577 JournalNode

29806 Jps

14526 DataNode

hadoop7服务器:

# jps

29188 Jps

14324 DataNode

13412 JournalNode

13580 NodeManager

3.3.8 启动并验证YARN

在hadoop1服务器上执行如下命令,启动YARN

start-yarn.sh

在hadoop3、hadoop4服务器上执行jps命令,验证YARN启动成功

hadoop3服务器:

# jps

21937 Jps

8070 ResourceManager

7430 QuorumPeerMain

hadoop4服务器:

# jps

6000 ResourceManager

20183 Jps

3.3.9 启动并验证ZKFC

在hadoop1服务器上执行如下命令,启动ZKFC:

hdfs --workers daemon start zkfc

在hadoop1和hadoop2上执行jps命令,验证DFSZKFailoveController进程启动成功

hadoop1服务器:

# jps

26721 NameNode

14851 QuorumPeerMain

50563 Jps

27336 DFSZKFailoverController

hadoop2服务器:

# jps

21825 DFSZKFailoverController

39399 Jps

15832 QuorumPeerMain

21482 NameNode

3.3.10 查看每台服务器上运行的运行信息

hadoop1服务器:

# jps

26721 NameNode

14851 QuorumPeerMain

50563 Jps

27336 DFSZKFailoverController

hadoop2服务器:

# jps

21825 DFSZKFailoverController

39399 Jps

15832 QuorumPeerMain

21482 NameNode

hadoop3服务器:

# jps

8070 ResourceManager

7430 QuorumPeerMain

21950 Jps

hadoop4服务器:

# jps

6000 ResourceManager

20197 Jps

hadoop5服务器:

# jps

16435 DataNode

31735 Jps

14939 JournalNode

15406 NodeManager

hadoop6服务器:

# jps

13744 NodeManager

13577 JournalNode

29833 Jps

14526 DataNode

hadoop7服务器:

# jps

14324 DataNode

13412 JournalNode

29211 Jps

13580 NodeManager

3.4 启动 hadoop 集群(2)

格式化HDFS

复制元数据信息

格式化ZKFC

启动HDFS

启动YARN

查看每台服务器上运行的运行信息

3.4.1 格式化HDFS

在hadoop1服务器上格式化HDFS,如下所示:

hdfs namenode -format

3.4.2 复制元数据信息

将hadoop1服务器上的 目录复制到hadoop2服务器上 目录下,在hadoop1服务器上执行如下命令:

scp -r /usr/local/hadoop-3.3.0/tmp hadoop2:/usr/local/hadoop-3.3.0/

3.4.3 格式化ZKFC

在hadoop1服务器上格式化ZKFC,如下所示:

hdfs zkfc -formatZK

3.4.4 启动HDFS

在hadoop1服务器上通过启动脚本启动HDFS,如下所示:

start-dfs.sh

3.4.5 启动YARN

在hadoop1服务器上通过启动脚本启动YARN,如下所示:

start-yarn.sh

3.4.6 查看每台服务器上运行的运行信息

hadoop1服务器:

# jps

26721 NameNode

14851 QuorumPeerMain

50563 Jps

27336 DFSZKFailoverController

hadoop2服务器:

# jps

21825 DFSZKFailoverController

39399 Jps

15832 QuorumPeerMain

21482 NameNode

hadoop3服务器:

# jps

8070 ResourceManager

7430 QuorumPeerMain

21950 Jps

hadoop4服务器:

# jps

6000 ResourceManager

20197 Jps

hadoop5服务器:

# jps

16435 DataNode

31735 Jps

14939 JournalNode

15406 NodeManager

hadoop6服务器:

# jps

13744 NodeManager

13577 JournalNode

29833 Jps

14526 DataNode

hadoop7服务器:

# jps

14324 DataNode

13412 JournalNode

29211 Jps

13580 NodeManager

四 Hadoop 的目录结构说明和命令帮助文档

4.1 Hadoop 的目录结构说明

使用命令“ls”查看Hadoop 3.3.0下面的目录,如下所示:

-bash-4.1$ ls

bin etc include lib libexec LICENSE.txt NOTICE.txt README.txt sbin share

下面就简单介绍下每个目录的作用:

bin:bin目录是Hadoop最基本的管理脚本和使用脚本所在的目录,这些脚本是sbin目录下管理脚本的基础实现,用户可以直接使用这些脚本管理和使用Hadoop

etc:Hadoop配置文件所在的目录,包括:core-site.xml、hdfs-site.xml、mapred-site.xml和yarn-site.xml等配置文件。

include:对外提供的编程库头文件(具体的动态库和静态库在lib目录中),这些文件都是用C++定义的,通常用于C++程序访问HDFS或者编写MapReduce程序。

lib:包含了Hadoop对外提供的编程动态库和静态库,与include目录中的头文件结合使用。

libexec:各个服务对应的shell配置文件所在的目录,可用于配置日志输出目录、启动参数(比如JVM参数)等基本信息。

sbin:Hadoop管理脚本所在目录,主要包含HDFS和YARN中各类服务启动/关闭的脚本。

share:Hadoop各个模块编译后的Jar包所在目录,这个目录中也包含了Hadoop文档。

4.2 Hadoop 命令帮助文档

1、查看指定目录下内容

hdfs dfs –ls [文件目录]

hdfs dfs -ls -R / //显式目录结构

eg: hdfs dfs –ls /user/wangkai.pt

2、打开某个已存在文件

hdfs dfs –cat [file_path]

eg:hdfs dfs -cat /user/wangkai.pt/data.txt

3、将本地文件存储至hadoop

hdfs dfs –put [本地地址] [hadoop目录]

hdfs dfs –put /home/t/file.txt /user/t

4、将本地文件夹存储至hadoop

hdfs dfs –put [本地目录] [hadoop目录]

hdfs dfs –put /home/t/dir_name /user/t

(dir_name是文件夹名)

5、将hadoop上某个文件down至本地已有目录下

hadoop dfs -get [文件目录] [本地目录]

hadoop dfs –get /user/t/ok.txt /home/t

6、删除hadoop上指定文件

hdfs dfs –rm [文件地址]

hdfs dfs –rm /user/t/ok.txt

7、删除hadoop上指定文件夹(包含子目录等)

hdfs dfs –rm [目录地址]

hdfs dfs –rmr /user/t

8、在hadoop指定目录内创建新目录

hdfs dfs –mkdir /user/t

hdfs dfs -mkdir - p /user/centos/hadoop

9、在hadoop指定目录下新建一个空文件

使用touchz命令:

hdfs dfs -touchz /user/new.txt

10、将hadoop上某个文件重命名

使用mv命令:

hdfs dfs –mv /user/test.txt /user/ok.txt (将test.txt重命名为ok.txt)

11、将hadoop指定目录下所有内容保存为一个文件,同时down至本地

hdfs dfs –getmerge /user /home/t

12、将正在运行的hadoop作业kill掉

hadoop job –kill [job-id]

13.查看帮助

hdfs dfs -help

五 集群动态增加和删除节点

5.1 动态添加 DataNode 和 NodeManager

5.1.1 查看集群的状态

在hadoop1服务器上查看HDFS各节点状态,如下所示:

# hdfs dfsadmin -report

Configured Capacity: 60028796928 (55.91 GB)

Present Capacity: 45182173184 (42.08 GB)

DFS Remaining: 45178265600 (42.08 GB)

DFS Used: 3907584 (3.73 MB)

DFS Used%: 0.01%

Replicated Blocks:

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

Erasure Coded Block Groups:

Low redundancy block groups: 0

Block groups with corrupt internal blocks: 0

Missing block groups: 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

-------------------------------------------------

Live datanodes (3):

Name: 192.168.254.134:9866 (hadoop5)

Hostname: hadoop5

Decommission Status : Normal

Configured Capacity: 20009598976 (18.64 GB)

DFS Used: 1302528 (1.24 MB)

Non DFS Used: 4072615936 (3.79 GB)

DFS Remaining: 15060099072 (14.03 GB)

DFS Used%: 0.01%

DFS Remaining%: 75.26%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Nov 18 14:23:05 CST 2021

Last Block Report: Thu Nov 18 13:42:32 CST 2021

Num of Blocks: 16

Name: 192.168.254.135:9866 (hadoop6)

Hostname: hadoop6

Decommission Status : Normal

Configured Capacity: 20009598976 (18.64 GB)

DFS Used: 1302528 (1.24 MB)

Non DFS Used: 4082216960 (3.80 GB)

DFS Remaining: 15050498048 (14.02 GB)

DFS Used%: 0.01%

DFS Remaining%: 75.22%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Nov 18 14:23:06 CST 2021

Last Block Report: Thu Nov 18 08:58:22 CST 2021

Num of Blocks: 16

Name: 192.168.254.136:9866 (hadoop7)

Hostname: hadoop7

Decommission Status : Normal

Configured Capacity: 20009598976 (18.64 GB)

DFS Used: 1302528 (1.24 MB)

Non DFS Used: 4065046528 (3.79 GB)

DFS Remaining: 15067668480 (14.03 GB)

DFS Used%: 0.01%

DFS Remaining%: 75.30%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Nov 18 14:23:05 CST 2021

Last Block Report: Thu Nov 18 14:09:59 CST 2021

Num of Blocks: 16

可以看到,添加DataNode之前,DataNode总共有3个,分别在hadoop5、hadoop6和hadoop7服务器上

查看YARN各节点的状态

# yarn node -list

Total Nodes:3

Node-Id Node-State Node-Http-Address Number-of-Running-Containers

hadoop5:34211 RUNNING hadoop5:8042 0

hadoop7:43419 RUNNING hadoop7:8042 0

hadoop6:36501 RUNNING hadoop6:8042 0

可以看到,添加NodeManager之前,NodeManger 进程运行在hadoop5、hadoop6和hadoop7服务器上

5.1.2 动态添加 DataNode 和 NodeManager

在hadoop集群所有节点中的workers文件中新增hadoop4节点,当前修改主机hadoop1:

# vi /usr/local/hadoop-3.3.0/etc/hadoop/workers

hadoop4

hadoop5

hadoop6

hadoop7

将修改的文件拷贝到其他节点上

# scp /usr/local/hadoop-3.3.0/etc/hadoop/workers hadoop2:/usr/local/hadoop-3.3.0/etc/hadoop/

# scp /usr/local/hadoop-3.3.0/etc/hadoop/workers hadoop3:/usr/local/hadoop-3.3.0/etc/hadoop/

# scp /usr/local/hadoop-3.3.0/etc/hadoop/workers hadoop4:/usr/local/hadoop-3.3.0/etc/hadoop/

# scp /usr/local/hadoop-3.3.0/etc/hadoop/workers hadoop5:/usr/local/hadoop-3.3.0/etc/hadoop/

# scp /usr/local/hadoop-3.3.0/etc/hadoop/workers hadoop6:/usr/local/hadoop-3.3.0/etc/hadoop/

# scp /usr/local/hadoop-3.3.0/etc/hadoop/workers hadoop7:/usr/local/hadoop-3.3.0/etc/hadoop/

启动hadoop4服务器上DataNode和NodeManager,如下所示:

# hdfs --daemon start datanode

# yarn --daemin start nodemanager

刷新节点,在hadoop1服务器上执行如下命令,刷新Hadoop集群节点:

# hdfs dfsadmin -refreshNodes

# start-balancer.sh

查看hadoop4节点上的运行进程:

# jps

20768 NodeManager

6000 ResourceManager

20465 DataNode

20910 Jps

5.1.3 再次查看集群的状态

在hadoop1服务器上查看HDFS各节点状态,如下所示:

# hdfs dfsadmin -report

Configured Capacity: 80038395904 (74.54 GB)

Present Capacity: 60257288192 (56.12 GB)

DFS Remaining: 60253356032 (56.12 GB)

DFS Used: 3932160 (3.75 MB)

DFS Used%: 0.01%

Replicated Blocks:

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

Erasure Coded Block Groups:

Low redundancy block groups: 0

Block groups with corrupt internal blocks: 0

Missing block groups: 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

-------------------------------------------------

Live datanodes (4):

Name: 192.168.254.133:9866 (hadoop4)

Hostname: hadoop4

Decommission Status : Normal

Configured Capacity: 20009598976 (18.64 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 4058525696 (3.78 GB)

DFS Remaining: 15075467264 (14.04 GB)

DFS Used%: 0.00%

DFS Remaining%: 75.34%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Nov 18 15:12:30 CST 2021

Last Block Report: Thu Nov 18 15:10:49 CST 2021

Num of Blocks: 0

Name: 192.168.254.134:9866 (hadoop5)

Hostname: hadoop5

Decommission Status : Normal

Configured Capacity: 20009598976 (18.64 GB)

DFS Used: 1302528 (1.24 MB)

Non DFS Used: 4072738816 (3.79 GB)

DFS Remaining: 15059976192 (14.03 GB)

DFS Used%: 0.01%

DFS Remaining%: 75.26%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Nov 18 15:12:33 CST 2021

Last Block Report: Thu Nov 18 13:42:32 CST 2021

Num of Blocks: 16

Name: 192.168.254.135:9866 (hadoop6)

Hostname: hadoop6

Decommission Status : Normal

Configured Capacity: 20009598976 (18.64 GB)

DFS Used: 1302528 (1.24 MB)

Non DFS Used: 4082335744 (3.80 GB)

DFS Remaining: 15050379264 (14.02 GB)

DFS Used%: 0.01%

DFS Remaining%: 75.22%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Nov 18 15:12:31 CST 2021

Last Block Report: Thu Nov 18 14:58:22 CST 2021

Num of Blocks: 16

Name: 192.168.254.136:9866 (hadoop7)

Hostname: hadoop7

Decommission Status : Normal

Configured Capacity: 20009598976 (18.64 GB)

DFS Used: 1302528 (1.24 MB)

Non DFS Used: 4065181696 (3.79 GB)

DFS Remaining: 15067533312 (14.03 GB)

DFS Used%: 0.01%

DFS Remaining%: 75.30%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Nov 18 15:12:33 CST 2021

Last Block Report: Thu Nov 18 14:09:59 CST 2021

Num of Blocks: 16

可以看到,添加DataNode之前,DataNode总共有3个,分别在hadoop4、hadoop5、hadoop6和hadoop7服务器上

查看YARN各节点的状态

# yarn node -list

Total Nodes:4

Node-Id Node-State Node-Http-Address Number-of-Running-Containers

hadoop5:34211 RUNNING hadoop5:8042 0

hadoop4:36431 RUNNING hadoop4:8042 0

hadoop7:43419 RUNNING hadoop7:8042 0

hadoop6:36501 RUNNING hadoop6:8042 0

5.2 动态删除DataNode和NodeManager

5.2.1 删除DataNode和NodeManager

停止hadoop4上面的DataNode和NodeManager进程,在hadoop4上执行

# hdfs --daemon stop datanode

# yarn --daemon stop nodemanager

删除hadoop集群每台主机的workers文件中的hadoop4配置信息

# vi /usr/local/hadoop-3.3.0/etc/hadoop/workers

hadoop5

hadoop6

hadoop7

刷新节点,在hadoop1服务器上执行如下命令,刷新hadoop集群节点:

# hdfs dfsadmin -refreshNodes # start-balancer.sh

参考文档:

[1] 逸非羽.CSDN: https://www.cnblogs.com/yifeiyu/p/11044290.html ,2019-06-18.

[2] Hadoop官网: https://hadoop.apache.org/

[3] 冰河.海量数据处理与大数据技术实站 [M].第1版.北京: 北京大学出版社,2020-09